This should not be news to you. To win in business you need to follow this process: Metrics > Hypothesis > Experiment > Act. Online, offline or nonline.

Yet this structure rarely exists in companies.

We are far too enamored with data collection, and with reporting the standard metrics we love because others love them, because someone else said they were nice many years ago. Sometimes we escape the clutches of this suboptimal existence and pick good metrics or engage in simple A/B testing.

But it is not routine.

So, how do we fix this problem?

This thought was on my mind as I was reading Lean Analytics, a new book by my friend Alistair Croll and his collaborator Benjamin Yoskovitz.

The book introduces a wonderful process called the Lean Analytics Cycle, which aims to help you create a sustainable way to pick metrics that matter by tying them to fundamental business problems, creating hypotheses you can test and driving change in the business from the learnings you identify.

I've asked Alistair to share his thoughts on this process with us. In this post, we’ll look at each of the four steps in the Lean Analytics Cycle in more detail. Then, for fun, we’ll look at three real-world case studies where companies put the steps to work so we can see the cycle in action.

Let's listen in as Alistair discusses the lean analytics model…

______________________________________________________

The Lean Analytics Cycle is a simple, four-step process that shows you how to improve a part of your business.

First, you figure out what you want to improve; then you form a hypothesis; then you create and run an experiment; then you measure the results and decide what to do.

The cycle combines concepts from the world of Lean Startup (which is all about continuous, iterative improvement) with analytics fundamentals. It helps you to amplify what’s proven to work, throw away what isn’t, and tweak the goalposts when data indicates that they may be in the wrong place.

Here's a pictorial representation of the complete lean analytics cycle:

[Note: Due to the limited screen real estate, I've redone all the images you see in this post. They preserve almost all original intent, but if you read the book, or see the cycle elsewhere, please don't be surprised to see a slightly different version. -Avinash]

While the process above might seem complex, we can simplify it to four key steps that any business of any size can apply to their analytics practice.

Step 1. Figure out what to improve.

One thing the cycle can't do is help you understand your own business. That part is your job. You need to know what the most important aspect of your business is, and how you need to change it.

- Perhaps it's an increase in your conversion rate;

- Or a higher number of visitors who sign up;

- Or a greater number of people who share content with one another;

- Or a lower monthly churn rate for users of your application;

- Maybe it's even something as simple as getting more people into your restaurant.

The point is, it's a critical metric for your business. You might need help from the business owner to figure out what the metric is. That's a good thing! It means you're relevant to the business, and if your trip around the cycle is successful, you'll have helped the organization get closer to its goals.

Another way to find the metric you want to change is to look at your business model. If you were running a lemonade stand, your business model would be a spreadsheet that showed the price of lemons and sugar; the number of people who passed by your stand; how many of them stopped to buy a drink; and what you could charge. Right there you have four things that are critical to the business and one of them is ripe for improvement. This is the one metric that matters to your business right now. You're choosing only one metric because you want to optimize it.

That metric is tied to a KPI. If it's the number of people buying, the metric is conversion rate. If it's the number of invites sent, it's virality. If it's the number of paying users who quit, it's churn.
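Each of the three KPIs named above is a simple ratio. As a minimal sketch (the function names are hypothetical, and the viral-coefficient formula, invites per user multiplied by the share of invites accepted, is one common definition of virality rather than necessarily the book's), they can be computed like this:

```python
def conversion_rate(buyers: int, visitors: int) -> float:
    """Share of visitors (e.g. passersby at the stand) who bought."""
    return buyers / visitors if visitors else 0.0


def churn_rate(paying_at_start: int, paying_who_quit: int) -> float:
    """Share of paying users at the start of a period who quit during it."""
    return paying_who_quit / paying_at_start if paying_at_start else 0.0


def viral_coefficient(invites_per_user: float, invite_acceptance_rate: float) -> float:
    """New users each existing user brings in (a common 'K-factor' definition)."""
    return invites_per_user * invite_acceptance_rate


# Hypothetical numbers, for illustration only.
print(conversion_rate(buyers=30, visitors=200))                               # 0.15
print(churn_rate(paying_at_start=500, paying_who_quit=25))                    # 0.05
print(viral_coefficient(invites_per_user=2.0, invite_acceptance_rate=0.25))   # 0.5
```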

The business model also tells you what the metric should be. You might, for example, need to sell each glass of lemonade for $5 to break even. That's your goalpost. It's the target for your KPI.
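To see how the business model sets the goalpost, here is a minimal sketch of the lemonade-stand spreadsheet as a small Python class. Every number and field name is an assumption chosen for illustration (they happen to produce the $5 break-even price mentioned above); the formula is simply break-even price = cost per glass + fixed costs ÷ glasses sold.

```python
from dataclasses import dataclass


@dataclass
class LemonadeStand:
    """Toy business model for a lemonade stand (all values hypothetical)."""
    cost_per_glass: float    # lemons and sugar for one glass, in dollars
    fixed_costs: float       # stand, pitcher, permit, etc., in dollars
    passersby: int           # people who walk past during the period
    conversion_rate: float   # share of passersby who stop and buy

    def glasses_sold(self) -> int:
        return round(self.passersby * self.conversion_rate)

    def profit(self, price: float) -> float:
        return self.glasses_sold() * (price - self.cost_per_glass) - self.fixed_costs

    def break_even_price(self) -> float:
        """Price per glass at which revenue exactly covers all costs."""
        glasses = self.glasses_sold()
        if glasses == 0:
            raise ValueError("no sales, so no price can break even")
        return self.cost_per_glass + self.fixed_costs / glasses


stand = LemonadeStand(cost_per_glass=0.50, fixed_costs=180.0,
                      passersby=400, conversion_rate=0.10)
print(stand.break_even_price())  # 5.0
print(stand.profit(5.0))         # 0.0
```

In this toy model, doubling the conversion rate to 0.20 drops the break-even price to $2.75, which illustrates why the target for one KPI depends on the other inputs in the model.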

So grab a piece of paper and write down three key business metrics you'd like to change. For each of them, write down the KPI you're measuring, and what that KPI should be for you to consider your efforts a success. Do it now; we'll wait.

Step 2. Form a hypothesis.

This is where you get creative. Experiments come in all shapes and sizes:

- A marketing campaign

- The redesign of an application

- A change in the way you price things

- Building shipping costs into a purchase

- Changing how you appeal to people

- The use of a different platform

- Changing the wording on buttons

- Testing out a new feature

Whatever the case, this is where you need inspiration. You can find that inspiration in one of two ways.

If you have no data, then you can try almost anything.

- Try to understand your market. Run a survey, or look at what else they do, or examine customer feedback, or simply pick up the phone.

- Steal from your competitors. If someone is doing something well, then imitate them. It's the sincerest form of flattery. Don't be different for the sake of being different.

- Use best practices. Read up on ways that companies are growing their business, from growth hacking to content marketing, and use that as inspiration.

If you have data, then figure out what’s different about the people who are doing what you want. Let’s say, for example, that you’re trying to lower the churn rate on an application. Each month, some of your users don’t quit. What do they have in common? What makes your most loyal customers different from the rest? Did they all come from the same place? Are they all buying the same things?
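Here is a minimal sketch of that comparison for one of those questions ("Did they all come from the same place?"): split users into retained and churned segments, then compare how each segment breaks down by acquisition source. The field names `source` and `churned`, and the data itself, are hypothetical.

```python
from collections import Counter


def trait_share_by_segment(users, trait):
    """Compare retained and churned users on a single trait.

    Returns {"retained": {value: share}, "churned": {value: share}}, where
    share is the fraction of that segment having the given trait value.
    """
    segments = {"retained": Counter(), "churned": Counter()}
    for user in users:
        segment = "churned" if user["churned"] else "retained"
        segments[segment][user[trait]] += 1
    result = {}
    for name, counts in segments.items():
        total = sum(counts.values())
        result[name] = {value: n / total for value, n in counts.items()} if total else {}
    return result


# Hypothetical users; "source" and "churned" are assumed field names.
users = [
    {"source": "referral", "churned": False},
    {"source": "referral", "churned": False},
    {"source": "ads", "churned": False},
    {"source": "ads", "churned": True},
    {"source": "ads", "churned": True},
    {"source": "ads", "churned": True},
    {"source": "referral", "churned": True},
]
shares = trait_share_by_segment(users, "source")
print(shares["retained"])  # referral about 0.67, ads about 0.33
print(shares["churned"])   # ads 0.75, referral 0.25
```

A gap like this (retained users skewing toward referrals) is not proof of cause, but it is exactly the kind of difference that can seed a hypothesis.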

Either way, the hypothesis comes from getting inside the head of your audience, asking them questions, or understanding what makes them tick.

The word hypothesis means a lot of different things, but in this context I like this definition from Wikipedia the best: “People refer to a trial solution to a problem as a hypothesis, often called an ‘educated guess,’ because it provides a suggested solution based on the evidence.”

We’re making an educated guess about what could improve the KPI based on what we learned in step 1.

Step 3. Create the experiment.

Once you have a hypothesis, you need to answer three questions to turn it into an experiment.

First: Who is the target audience? Everything happens because someone does something. So who are you expecting to do a thing? Is this all visitors, or just a subset of them? Are they the right audience? Can you reach them? Until you know whose behavior you’re trying to change, you can’t appeal to them.

Second: What do you want them to do? Is it clear to them exactly what it is you’re asking them to do? Are they able to do it easily, or is something getting in their way? How many of them are doing it today?

Third: Why should they do it? They’ll do what you ask if it’s worthwhile to them, and if they trust you. Are you motivating them properly? Which of your current pleas is working best? Why do they do this thing for your competitors?

On the surface, these three questions—who, what, and why—don’t seem hard to answer. But they are. That’s because they require you to have a deep understanding of your customers. In the Lean Startup world, this is called customer development. The experiment will almost always look like this:

Find out if WHO will do WHAT because WHY enough to improve KPI by the Target you've defined.

This gets to the deliberate nature of the actions we want to take. You start with a great hypothesis, and you’ll get a great experiment. This also keeps you honest, because everyone recognizes the point of the activity beforehand.
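Because the template has fixed slots, it is easy to enforce. The sketch below uses a hypothetical `Experiment` class (not something from the book) that refuses to build a statement while any slot is empty; filled in with the values from the Airbnb case study below, it reproduces that experiment's wording.

```python
from dataclasses import dataclass, fields


@dataclass
class Experiment:
    """Hypothetical helper that fills in the WHO/WHAT/WHY template."""
    who: str
    what: str
    why: str
    kpi: str
    target: str

    def __post_init__(self):
        # Refuse to create an experiment while any slot is blank.
        for f in fields(self):
            if not getattr(self, f.name).strip():
                raise ValueError(f"'{f.name}' must be filled in before running the experiment")

    def statement(self) -> str:
        return (f"Find out if {self.who} will {self.what} because {self.why} "
                f"enough to improve {self.kpi} by {self.target}.")


airbnb = Experiment(
    who="travelers",
    what="book more properties",
    why="of professionally photographed listings",
    kpi="the property bookings",
    target="X%",
)
print(airbnb.statement())
```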

In the case studies below, you'll see that the KPIs were things like Property Bookings, Number of Engaged Users, and Daily App Use. If you have access to existing data, take some time to document what the current performance looks like. Of course, it’s possible that you don’t have access to the data (as in the Airbnb case study below). That’s OK. We have a path for that as well.

Once you have your experiment, set up your analytics to measure the KPI against its current baseline and the goal you’ve set. Then run your experiment.

Step 4. Measure and decide what to do.

At this point, you’ll know whether your experiment was a success. This leaves us with several options:

1. If the experiment was a success, you’re a hero. Celebrate a bit, then find the next metric that matters the most and move on to the next who, what, why exercise.

2. If the experiment failed spectacularly, we need to revisit our hypothesis. It’s time to identify a new who, what, and why, based on what we’ve learned. Remember, as long as you learn from it, failure is never a “wasted” opportunity.

3. If the experiment moved the needle, but not enough to clear the goalposts, we should try another experiment. Our hypothesis might still be valid, and we can try again, adjusting based on what we’ve learned.
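The three outcomes can be written as a small decision rule. This is a sketch under stated assumptions: a higher KPI is better (negate the values for something like churn), the result has already been checked for statistical significance, and `min_change` is a hypothetical threshold for deciding whether the needle moved at all.

```python
def decide(baseline: float, target: float, observed: float,
           min_change: float = 0.02) -> str:
    """Map an experiment result onto the three outcomes of step 4.

    Assumes a higher KPI is better and that significance was checked first.
    `min_change` is a hypothetical threshold for "the needle moved".
    """
    if observed >= target:
        return "success"          # celebrate, then pick the next metric
    if observed - baseline < min_change:
        return "new hypothesis"   # failed: identify a new who, what, why
    return "iterate"              # moved, but short of the goalposts


# Baseline and target echo the High Score House case study below;
# the observed values are hypothetical.
print(decide(0.20, 0.60, observed=0.20))  # new hypothesis
print(decide(0.20, 0.60, observed=0.45))  # iterate
print(decide(0.20, 0.60, observed=0.65))  # success
```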

The underlying beauty here is that we’re being smart, fast, and iterative. We’re making a deliberate plan, measuring its results, and circling ever closer to our goal. Identify, hypothesize, test, react. Repeat.

Let’s look at some case studies that will really help to drive the Lean Analytics Cycle home. We don’t know everything about the metrics these companies dealt with — they’re private companies, after all — and in some cases we’ve estimated numbers to make the explanations more clear. But even with these changes, the examples will help make all of this a bit more real.

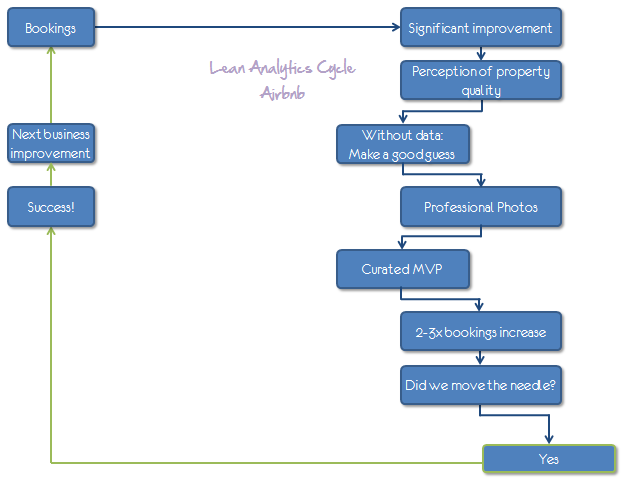

Case Study 1: Airbnb

Airbnb is a hugely popular marketplace for rental-by-owner properties. They’ve found dozens of creative ways to grow, but they’re always judicious and data-driven.

Step 1: Figure out what metric to improve

The metric they wanted to improve was the number of nights that a property was rented. Notice that this is more central to their business than simply measuring revenue: Airbnb does well if its homeowners do well, and for it to succeed, it needs listed properties to be rented often so the homeowners will stick around.

The company knew that to succeed, they needed a significant improvement in rental rates per property.

- One Metric That Matters: “Number of nights rented.”

- KPI: Property bookings

- Target: (not publicly known)

- Current level: (not publicly known)

Step 2: Form a hypothesis

We don’t know how Airbnb came up with its hypothesis. But we know it had access to property listings that rented well.

- Perhaps they had noticed that the pictures of those properties looked, to them, more professional.

- Maybe they realized that one common complaint from renters was that the property didn’t actually look like the pictures on the site.

- Maybe they found that people would most often abandon a listing right after seeing photographs.

- Maybe they analyzed the metadata from pictures and found that there was a strong correlation between properties that rented often and expensive camera models.

However they got there, they formed a hypothesis: Properties with better pictures rent more often.

Step 3: Create the experiment

Armed with the hypothesis, it was time to create the experiment. As is often the case, having a clear hypothesis makes devising the experiment fairly easy. Their who, what, and why are as follows:

Who is the target audience? Travelers.

What do you want them to do? Decide to rent a property more frequently.

Why do they do it? Because the photographs look professional and make the property look beautiful.

So for them, the experiment was:

Find out if travelers will book more properties because of professionally photographed listings enough to improve the property bookings by X%.

Notice that in this case, Airbnb didn’t really need any current data. It might just as easily have been a random comment over lunch that led to the hypothesis. But even if the hypothesis isn’t founded in hard data, the experiment design must be.

To run this experiment, Airbnb created what Lean Startup calls a curated minimum viable product. This is like the Wizard of Oz: most of the hard work is done behind the curtains, but the end user thinks they’re seeing a final product.

Airbnb wasn’t sure whether or not the experiment would work, so the team didn’t want to hire a staff of photographers or invest in a new part of the application. But at the same time, they had to have a real test of an actual feature.

Before we go further, there’s an important lesson to take away here. You can do this at Nordstrom, or Expedia, or Unilever. You don’t need to build a magnificent shining castle. You don’t need a beautiful beast to go out and test. You can start small, lean, and mean, with just the customer-facing pieces you want to test, and go validate (or disprove!) your hypothesis.

Airbnb’s experiment consisted of something that looked like a real feature, but under the covers was really just humans and contracted photographers. During the experiment they took pictures of properties, and then measured the KPI, comparing properties that had been photographed to those that hadn’t.

Step 4: Measure performance

In this case Airbnb measured the bookings from the few properties that had professional photos and compared the rate of bookings with properties that only had photos taken by property owners. The result? The properties with professional photography had 2-3 times the number of bookings!

[Remember that the raw number is not the only thing that matters; we would also measure statistical significance. Airbnb had enough data points to be confident in their results. -Avinash]
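The note above does not say which significance test to use. A common choice for comparing two booking rates is the two-proportion z-test, sketched here with only the Python standard library. The counts (90 of 300 listing-nights booked with professional photos versus 40 of 300 with owner photos) are invented for illustration; they are not Airbnb's data, and we don't know which test Airbnb actually used.

```python
import math


def two_proportion_z_test(successes_a: int, trials_a: int,
                          successes_b: int, trials_b: int) -> tuple[float, float]:
    """Two-sided z-test for the difference between two proportions.

    Uses the pooled proportion under the null hypothesis that both groups
    share the same underlying rate. This is a normal approximation, so it
    needs reasonably large counts in each group.
    """
    p_a = successes_a / trials_a
    p_b = successes_b / trials_b
    pooled = (successes_a + successes_b) / (trials_a + trials_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / trials_a + 1 / trials_b))
    z = (p_a - p_b) / std_err
    # erfc(|z| / sqrt(2)) equals 2 * (1 - Phi(|z|)), the two-sided p-value.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Invented counts: booked listing-nights out of available listing-nights.
z, p = two_proportion_z_test(90, 300, 40, 300)  # professional vs owner photos
print(f"z = {z:.2f}, p = {p:.1e}")  # z is roughly 4.95; p is far below 0.05
```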

By 2011, the company had 20 full-time photographers on staff.

The graph is impressive, right? There were many other things going right with Airbnb's business and business model. But the lean process was a key contributor to improving the bookings rate. Clearly, the experiment was a success. They celebrated a bit, then went on to fix the next biggest problem in the business.

Case Study 2: Circle of Friends

Circle of Friends was a social community built atop Facebook that launched in 2007. It essentially allowed you to create a group of friends who could interact and share content, much as people do today with Google+, before such features were part of Facebook.

As a social network based on user-generated content, Circle of Friends only grew when its users were engaged. The company’s creators wanted users not only to create groups, but also to send messages within those groups, invite others, and interact with Facebook elements such as news feeds.

Step 1: Figure out what metric to improve

The founders had several measures of “engagement”, from whether people attached a picture to a post, to whether they clicked on Facebook notifications, to the length of posts they wrote. Any of these actions — and several others — constituted “engagement.” All of this rolled up into a simple KPI: number of engaged users.

While Circle of Friends’ launch was hugely successful in terms of raw attention — they had 10 million users! — engagement simply wasn’t happening. None of the metrics they used to measure the health of their communities was where they wanted it to be.

The rather nebulous “level of engagement” was a compound metric, which can be dangerous to rely on. But one of the clear metrics they tracked was “number of circles with activity”, meaning that there had been some form of interaction within a group in the past few days.

- One Metric That Matters: User engagement

- KPI: Number of active users; number of circles with activity

- Target: (not publicly known)

- Current level: Less than 20% of circles had any activity after creation

The challenge here was how to kickstart engagement. That’s not an easy thing to do. Fortunately, Circle of Friends had plenty of user data to mine.

Step 2: Form a hypothesis

Circle of Friends had huge volumes of information on its users and how they used the product. They looked at two groups of users: those that were engaged, and those that weren’t. Then they looked at what those users had in common. In other words, they defined what engagement meant (i.e. “someone who has returned in the last week”) and segmented users into two groups. Then they looked at other things those people had in common.

What they found changed their business. It turned out that the engaged users were much more likely to be mothers. Compared with other users, moms who used the application:

- Wrote messages to one another that were, on average, 50% longer

- Were 115% more likely to attach a picture to a post they wrote

- Were 110% more likely to engage in a threaded (i.e., deep) conversation

- Were 50% more likely to engage if they were friends of the circle owner

- Were 75% more likely to click on Facebook notifications

- Were 180% more likely to click on Facebook news feed items
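"X% more likely" is a relative lift: the segment's rate divided by everyone else's rate, minus one. So "115% more likely" means moms did it 2.15 times as often as other users (not 115% of the time), and "180% more likely" means 2.8 times as often. A minimal sketch with hypothetical rates (the source doesn't give the underlying rates):

```python
def percent_more_likely(rate_segment: float, rate_others: float) -> float:
    """Relative lift in percent: (segment rate / others' rate - 1) * 100."""
    if rate_others == 0:
        raise ValueError("the comparison rate is zero, so lift is undefined")
    return (rate_segment / rate_others - 1) * 100


# Hypothetical rates: 21.5% of moms' posts include a picture vs 10% of others'.
print(round(percent_more_likely(0.215, 0.10), 1))  # 115.0
```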

Before we look at what they decided to do, stop for a minute and realize that simply having data isn’t that useful. Circle of Friends had all of this information. But asking the right questions of your data is a superpower. In this case, they asked, “what do engaged users have in common?” and it absolutely changed the destiny of their company.

From their data, they formed a hypothesis: If we focus only on moms we’ll have the engagement we need.

Step 3: Create the experiment

Their who, what, and why were as follows:

Who is the target audience? Moms.

What do you want them to do? Engage with other users, invite new users, and create content.

Why do they do it? Because they find interaction with others rewarding and compelling.

In the end, the founders made a huge bet — the kind of bet that only startups with nothing to lose can make. They decided to completely rebrand the company as Circle of Moms, and focus everything on attracting and engaging mothers.

So their experiment was:

Find out if moms will join, relate and engage in groups because of a community targeted specifically at them enough to improve the number of active circles and engaged users by X%.

They were betting that if they stopped trying to please everyone, and instead focused specifically on mothers, they’d have a higher percentage of engagement, which would in turn lead to the company’s overall success.

Step 4: Measure performance

It took a few months to carry out the experiment, since it involved re-launching the entire company. The initial result was a huge drop in users, of course, since most of the communities weren't made up of mothers.

They lost millions of users.

They feared this would happen, but they knew that if they didn't get engagement where it needed to be they might as well close up shop.

If they'd been focusing on the wrong KPI, such as number of subscribers, they'd have quickly concluded that the experiment was a disaster. But because they'd been deliberate about their hypothesis, they knew that the most important metric was engagement. Sure enough, the users that remained were engaged. Overall engagement and active circles climbed significantly, to the point where the business model was healthy.

Of course, having achieved the engagement they sought, the next experiment was about whether they could grow a more narrowly focused community to a decent size. By late 2009, that experiment was a success, too; they'd climbed back up to 4.5 million users, with strong engagement.

Case Study 3: High Score House

High Score House is a tool that lets parents manage household chores and rewards for their kids. The founders envisioned a world where parents and children sat down each day to identify chores, keep score of what the kids have done, and drool over rewards they might claim.

Step 1: Figure out what metric to improve

In the early stages of launch, the most important metric was the number of families who fired up the app at least once a day. Those who did were considered "active", while those who didn't were assumed to have abandoned the application. Unfortunately, while High Score House was getting installations and great press reviews, users weren't active.

No active users, no business.

- One Metric That Matters: "Active families"

- KPI: Percent of families who've used the app in the last 24 hours (AKA "percent daily use")

- Target: Over 60%

- Current level: Below 20%

Step 2: Form a hypothesis

The founders went around the Lean Analytics Cycle several times:

- They tried changing the look and feel of the application.

- They tried sending people messages urging them to come back to the application.

- They tried adding new features.

None of it worked; fewer than 20 percent of families who were part of the early release used the app each day.

Each time, their hypothesis took roughly the same form, for example: if we send a reminder email to parents each day, the percent daily use will exceed 60%.

Step 3: Create the experiment

The High Score House team went dutifully through the motions of the Lean Analytics Cycle. For the notification hypothesis, the who, what, why looked like this:

Who is the target audience? Parents.

What do you want them to do? Use the app daily.

Why do they do it? An email will remind them to launch the app and sit with their kids, making it a daily activity that becomes part of their routine.

In other words, their experiment (for the notification model) was:

Find out if parents will use the app daily because we remind them to do so enough to get the percent daily use to 60%.

They ran the experiment.

Step 4: Measure performance

The experiment disproved the hypothesis. Email reminders didn’t measurably improve percent daily use. Like many of the other changes the team had tried, the reminders couldn’t move the needle.

At this point, Kyle, the founder of the company, decided it was time to ditch the data and go qualitative. He picked up the phone and started talking to users. And this simple, messy, unquantified, anecdotal process yielded an amazing insight:

Many families would set aside a specific day of the week to plan their chores and count their rewards.

Kyle was floored. Families were getting value from the product. They were just using it in a different way from what the designers intended. Kyle now had new information which he could use. But it wasn’t for another experiment. This time, Kyle was going to move the goalposts (the target).

Changing your target should never happen lightly. It’s only OK to change the goal when the customers have given you new, validated learning from which to make the change. And because your goal is tied back to your business model (remember the $5 glass of lemonade?), changing the goal means changing your business model.

In this case, HSH was going to have to change the way their application was designed, making it more suitable for week-by-week views and a different usage pattern. But by moving the goalposts, the team had a new KPI: Percent of families who've used the app in the last 7 days (AKA "percent weekly use"). And with this new definition, over 80% of the early adopters were using the application regularly.
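Moving the goalposts here changes only the time window in the KPI definition. A minimal sketch, assuming each family's most recent app use is available as a timestamp (the family names and timestamps are invented, not High Score House data): the same data yields very different percentages for a 24-hour window and a 7-day window.

```python
from datetime import datetime, timedelta


def percent_active(last_used: dict[str, datetime], now: datetime,
                   window: timedelta) -> float:
    """Percent of families whose most recent use is within `window` of `now`."""
    if not last_used:
        return 0.0
    active = sum(1 for ts in last_used.values() if now - ts <= window)
    return 100 * active / len(last_used)


# Hypothetical last-use timestamps for five families.
now = datetime(2024, 1, 15, 20, 0)
last_used = {
    "family_a": now - timedelta(hours=2),
    "family_b": now - timedelta(days=3),
    "family_c": now - timedelta(days=5),
    "family_d": now - timedelta(days=6),
    "family_e": now - timedelta(days=10),
}
print(percent_active(last_used, now, timedelta(hours=24)))  # 20.0 (percent daily use)
print(percent_active(last_used, now, timedelta(days=7)))    # 80.0 (percent weekly use)
```

The same function could measure Circle of Friends’ “circles with activity” by passing circle IDs and a window of a few days.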

We’ve seen three case studies. The first one, about Airbnb, shows a straightforward example of a hypothesis and an experiment without data. The second, about Circle of Friends, shows an example of an experiment based on data. And the third one, about High Score House, shows how to adjust the goalposts when they aren’t set properly in the first place. All three are examples of the Lean Analytics Cycle at work.

______________________________________________________

Amazing, right? Life's pretty cool when you have a process!

For me the lean analytics cycle is, at its very core, about driving change quickly, regardless of how much you know about your users or how much data you have. It discourages waiting for perfect data or perfect understanding of every variable or perfect understanding of what the business is trying to solve for (because in all three cases you will never get perfection!).

While it was developed with startups in mind, you can see the attraction of speed, learning and constant improvements for businesses of any size.

It is important to note that the cycle, and the Lean model as a whole, isn’t advocating chaos. They might deal with uncertainty, but they're not random.

There is great deliberation in step one to identify the KPI that will be a guiding light for us (embrace "One Metric That Matters"). There is a lot of deliberation in step two on ensuring that we have an optimal hypothesis to work from. In step three, we figure out how we are going to experiment, with the deep clarity that comes from defining the who, what, and why. Finally, in step four, we understand whether we actually succeeded by measuring performance. Then, then … you learn. You internalize. You win.

And how can you be immensely incredible at this? Go get Alistair and Ben's book Lean Analytics.

As always, it is your turn now.

Do you follow a structured process inside your company to ensure data-driven decisions are being made constantly? If you were to apply the Lean Analytics Cycle, what challenges might cause problems inside your company? As you look at the cycle, is there anything that might be missing, or tweaks you would make to improve it?

Please share your thoughts, critique, ideas, and musings via comments.

Thank you.

Thanks for sharing a great article, Avinash… I am also starting a new business; I hope that with your article I may also succeed.

Very well written with graphics and very easy to understand.

Another great post Avinash!

It would be great if you wrote another post like this, but with KPI examples and some specific reports from GA.

Which reports you should look at first, which issues are most important, etc.

"Google Analytics Case Studies From The Pro's Eye View"

You have a title. Go for it!

:)

Shuki: There are more posts on this blog than you want on those two topics.

Here are a couple on picking KPIs:

~ You Are What You Measure, So Choose Your KPIs (Incentives) Wisely! The post makes the case for these KPIs: Economic Value, Task Completion Rate, Visitor Loyalty, Share of Global Search, Conversation Rate, 30-day Actives etc.

~ Best Web Metrics / KPIs for a Small, Medium or Large Sized Business Perhaps the best collection of starting points on picking metrics, see the summary picture at the end.

On reporting…

~ Google Analytics Tips: 10 Data Analysis Strategies That Pay Off Big! How to use standard reports to find golden insights. The path to analytics nirvana goes through custom reports and advanced segments.

~ Google Analytics Custom Reports: Paid Search Campaigns Analysis You can learn how to do custom reporting better from my PPC reports; just download them.

This should get you going on implementing the lean cycle with your clients!

-Avinash.

Good post. If I am correct, we focus on improving only one KPI at a time in the lean analytics cycle. So can I conclude that the lean analytics cycle is better (lighter, faster and more practical) than the big and heavy WAMM, where we pick up and analyze several KPIs and try to solve several problems at once? I'm not sure how the acquisition, behavior and outcomes buckets fit into the lean analytics cycle, or why Alistair has not taken them into account. But I really like the idea of focusing on only one problem at a time.

I like the whole concept behind the lean analytics cycle but would consider changing step 1. Instead of first figuring out what to improve, I would try to determine what the problems are through qualitative and quantitative data. This will help me determine which issue I should be fixing. There are usually 'N' objections/problems with any business, and nobody has the time and resources to fix them all. So I need to prioritize what needs to be tested first, what needs to be tested second, and so on.

This is different from the first step proposed by Alistair in that I am not relying on my own understanding of the client's industry/business, or on the client, to figure out what the problem is, what needs to be improved or what the metric should be. Instead, from the very start I am asking my target audience what their problems are. This will greatly increase my probability of forming a correct hypothesis and experiment later on.

Let me give you one real-life example. One of my clients (in the drug rehab industry) had a very high cost per acquisition, and they weren't able to figure out why. They hired me to fix this problem. I was brand new to the drug rehab industry and was more clueless than the client about this 'why'. I had no idea what to improve. To make matters worse, all of the conversions and user interactions were taking place offline via phone calls.

So quantitative data was pretty much useless to me. I needed to figure out where to start and which issues to focus on, and fast. As consultants, we don't get the luxury of studying a business for months before forming strategies or selecting KPIs. So I ran a survey on the client's website. I also surveyed the call center staff and asked them to send me a list of the top objections raised by callers so far.

The number one objection was 'location of the center'. My client was advertising across the whole US, and the majority of callers were looking for a drug rehab center close to their area. The survey data was enough to form the hypothesis that the drug rehab business is very much local, and that they would be better off directing their marketing efforts only at California (where the client's center is located). Even my client, who has been in the drug rehab business for years, had no idea how local his business was.

So IMHO the first step should be: figure out the problems you need to solve by creating a list of all the objections raised by your clients, and then decide what needs to be improved first, what needs to be improved second, and so on.

Himanshu: This is one of those "and" situations, rather than an "or" situation.

If I were to walk into a large company like GE or Chase or Intel, or even a medium-sized company like Warby Parker or Bonobos, and say, "You should focus on one metric at a time," I'd be fired in five seconds.

Big businesses can learn a lot more from startups, and their attitude, and figure out how to be a lot more agile. They are also solving for different things. You can't only do Lean in a big (or even a medium sized) company. There are too many problems to solve and they have lots of people and money to spend to solve them all together.

But directly applicable from Lean Cycle is the process companies can use to choose new metrics, how to experiment on the bleeding edge with new ideas (this is almost non-existent in large companies), how to kill things that don't work (bigger companies tend to accumulate), how to be 50x faster than their current speed, and so much more. I hate the fact that we don't experiment more, not just with content etc, but with KPIs and their direct impact on the business – the Lean Cycle makes this easier for large/medium companies.

I see Lean Cycle as not an "either/or", rather as a very important "and."

That is harder for us (Analysts, Consultants, Business Folks) because we have to master multiple things at a time. But that is how one becomes a massive success. :)

-Avinash.

Himanshu,

Thanks for your thoughtful reply. I agree completely that for businesses which are already running, it can be hard to find one thing to focus on, often because the business is complex. Within a single project, problem area, or product, however, the upside of focus often overcomes the downside of myopia.

I didn't get into it in this post (it was pretty long as it is!), but in the book we identify five distinct stages companies all go through: Empathy, Stickiness, Virality, Revenue, and Scale. The Empathy stage is all about getting inside the head of your customers, at first qualitatively (through interviews and customer discovery) and then quantitatively (at scale). A good tool for helping this process is Ash Maurya's Lean Canvas, which forces you to write down the business problem as a set of simple statements.

You do, however, need to be wary of overfitting to your current customers, something covered abundantly in The Innovator's Dilemma. For "intrapreneurs" tilting at corporate windmills from within, this is a constant challenge. "Evolutionary" change is the domain of business-as-usual analysts, and while they can learn from the Cycle, they're still playing by the rules. "Revolutionary" change requires rewriting the rules. That means that rather than doing what customers say they need, you're doing what customers will need one day based on their current behaviors.

Predicting the future yields huge benefits if you get it right, but is extremely costly if you build the wrong thing. That's why the Lean Startup advocates creating the minimum product, service, or feature necessary to confirm—or disprove—an idea.

Ultimately, you're right that hypotheses can come from all sorts of places. It's how we turn those hypotheses into informed learnings and actions that matters.

@avinash Thanks for the clarification. You wrote about the 'metrics life cycle', which is very similar to the Lean Analytics Cycle in that both focus on continuous experimentation and improvement. So what exactly is the advantage of the Lean Analytics Cycle over the metrics life cycle? They both look the same to me, except that you used the word 'define' and the Lean Analytics Cycle uses the word 'hypothesis'. I must be missing something here. Also, how similar to or different from agile analytics is 'lean analytics'?

@Alistair Thanks for the input. My comments are based on what I read in the post. Surely you have thought through and covered various business aspects in depth in your book. Looking forward to reading your book, and probably another post on the 'lean analytics cycle' with tons of great examples :)

Avinash, a lot is said about testing your web analytics solution, but here is a problem.

Imagine a client running short on budget for his web applications. He has limited funds for his many ambitious projects, one of which is definitely web analytics. How is he supposed to carry out A/B testing when he can hardly afford to build A in the first place? Aren't there any cost-effective ways of testing your solutions?

And how about an in-depth application of decision sciences/hypothesis testing and some simple mathematics to test the designed solution on a small sample, maybe just a team of a few employees, rather than on real customers?

S M: Three things are important in winning: 1. Time. 2. Effort. 3. Money.

I would postulate that at least two are required to win big. If only one is available you can scrape by. If none are present, everyone loses.

To your question… If the client does not have budget, but is willing to put in some time and effort then there are free testing solutions she/he can use. Or, as Alistair points out, you just make changes in production and see what happens. But you need #1 and #2.

If you have very little traffic, you have a choice. Math won't help you; there simply is not enough traffic to compute statistical significance. So you can absorb the risk (that you could be wrong), show versions (paper mocks or wireframes) to current or potential users (there are many online usability testing options), and use that information.

Or you can conduct heuristic evaluations (with competitive analysis) which only need a few people inside your company, and use that to implement the lean cycle.

-Avinash.

Thank you so much for your time. This was the first time I visited your blog, and I can't stop myself from going through all your articles. Very informative. Thanks!

S M,

Let me give you an example. The Houston airport once had a baggage problem. Passengers consistently complained that their bags took too long to make it from the plane to the carousel. The average bag took 8 minutes!

So they worked hard to improve things, and managed to reduce the time it took bags to an average of six minutes. Then they checked the surveys: nothing had changed.

Frustrated, someone tried parking the planes farther from the baggage claim area. Complaints dropped to zero.

The problem was that the cause of the complaints wasn't "bags take too long," it was "I don't like standing around waiting." Apparently walking was fine.

Now the lesson here is this: if you're an analyst for the Houston airport, you have two experiments: should we move the planes, or retool the baggage systems? One of those two options is much cheaper than the other, so you should try that first. This is part of choosing which hypotheses to test: formulating creative experiments that can be done on time and on budget. This is where much of the creativity of "knowledge workers" will belong in an era of so-called Big Data.

I should mention that this article has the whole story:

http://www.nytimes.com/2012/08/19/opinion/sunday/why-waiting-in-line-is-torture.html?pagewanted=all

Alistair Croll, thank you so much for the article. Great story, and I loved the way you drew its relevance here. I would like to hear more from you about Lean Analytics; if possible, please suggest some reference material for us. Anyway, thanks once again for your time, and good luck. Let the passion in you prevail!

Well, the book is a good place to start ;-) but Ben and I also put our content on Slideshare (under Leananalytics) if you want to look there.

Well, I've already ordered a copy of your book and am waiting for its delivery. :)

Thanks Alistair…

What a great way to start a Monday, Avinash. We've been doing exactly this lately and are beginning to get pretty decent at it, too! Discipline in process is so critical, and yet so few see it as key to any marketing strategy itself.

In the last quarter alone we've had to work to get everyone up to speed on the differences between things we could test (e.g., buttons!) and a true hypothesis (e.g., how can we make someone's life easier/better/more enjoyable?). Now we're tackling MVT testing and carefully filling our matrices with what we feel are only the strongest potential ideas.

I find myself constantly coming back to your "Analytics Trinity" concept from the late 2000s. Yes, I know today is all about multiplicity, but the Trinity did such a good job of stressing the importance of quantitative and qualitative data that it stuck with me. It's been extremely gratifying lately to dig deeper into both types of data, pull out the insights students really care about, and have those be some of the primary drivers behind our experiments.

Life is good, and the results are even better. Thanks for a great post!

Thanks for the feedback, Josh. If you're doing this with a startup we'd love to hear more; Ben and I are always looking for case studies.

Thanks a lot Avinash.

As always very clear, enlightening and actionable.

Hi Avinash,

It's great to see this process in other people's minds, too. I think I published a similar cycle just a year ago on Slideshare (German only, sorry).

Link: http://de.slideshare.net/gradlinigcom/web-analytics-mit-system

Great that other people also think about the processes that could improve the overall outcome of our work as analysts.

It is maybe the most important thing to, first, get the process and, second, get the support of the affected coworkers or teams.

No matter how good your analyses are, if you don't do them over and over again (which leads to a process) and don't get others to support you, you won't succeed.

Great article as always Avinash.

Best regards

Uli

Ulrich: It is hard to understand your slides (sadly, I don't speak German!), but it is very easy to understand slides 10 and 11 in your deck. They reminded me of this picture from a Dec 2007 post, Web Metrics Demystified:

I concur 100% with you on getting support. One of my strategies is to make superheroes out of our clients. As I succeed with my marketing and analytics strategies, every single time I try to figure out how to step out of the light and make sure a business person or owner is being celebrated. If they are celebrated, we all win (including getting support :)).

-Avinash.

Hi Avinash,

I just did a quick translation of the slides. I must apologize for the bullet points ;o)

http://de.slideshare.net/gradlinigcom/a-systematic-approachtowebaanlytics

I appreciate your work and always recommend your books, to clients and employees.

My slides might seem to be a little redundant now knowing your cycle…

Best regards

Uli

Ulrich,

Thanks for sharing. I can't follow much of it (no German here either, unfortunately) but the cyclical nature of these things is critical. Many people think once they optimize something they're done. They're not—they have either now created a new thing to optimize, or they've got more improvement of their initial metric to do.

We say in the book that "metrics are like squeeze toys." That is, when you optimize one, the next one bulges out obviously. Fix site traffic? Now you want conversion. Fix conversion? Time to tackle shopping cart size. Shopping cart good? Maybe you have too many returns. And so on.

Alistair,

You are so right. I like the squeeze toy analogy.

It's like running in circles. And sometimes the highest potential for optimization is 'offline'.

But sometimes it is OK to tell your client: we are done for now, so go and improve your products and your services based on analytics. Grow your market, and then come back and optimize the online business.

I hope my translation of the slides will serve you too.

best regards

uli

I had the opportunity to read this book and it was very interesting.

For me, it's about agile marketing, analytics, action, and a real focus on customers.

Thanks

Hey Alistair and Avinash …

… Great article!:)

Regarding applying the Lean process in SMEs vs. large companies, I would argue that, in my experience, large companies are in dire need of simplifying the testing and reporting process, as many of them are at a startup or early stage when it comes to the web analytics part of the business. Unfortunately, I have seen a lot of large, hard-to-read reports that were produced only because they had to be, contained not a single actionable insight, and were large because all the other reports were large. Going back to basics (focusing on single metrics and solving simple business problems one at a time) was the key in some of the projects I worked on. Finally, things became measurable (cause and effect).

@Avinash I completely agree with what you said: "There are too many problems to solve and they have lots of people and money to spend to solve them all together." I have the impression, though, that this really works only in companies with a good measurement framework in place.

And again great article – keep 'em coming!

Zorin: We need simplicity, and we need focus.

In a recent post, Convert Complex Data Into Simple Logical Stories, I tried to make the case, using an example, for how with very little effort we can go from a random data puke (pretty much all current reporting) to something impactful.

I'm also a fan (as is obvious in this post) of having a structured approach to driving simplicity, focus and actionability for medium/large sized businesses. Hence my adoration for the Digital Marketing & Measurement Model framework.

-Avinash.

Excellent and actionable.

Thank you.

Great Post Avinash!

I have a question for you: how would I set up Analytics goals for a dynamic PHP page where the content is always changing?

Thanks

Abhishek: You have several options.

The best option for complex GA requests is to hire a GACP to go through the requirements, evaluate your precise needs and recommend the right path. You'll find a list here: http://www.bit.ly/gaac

-Avinash.

Thanks Avinash… :)

Hey Avinash,

I just realized that the Who, What, and Why questions are very similar to the process I use for analyzing data, your ABC: Who = Acquisition, What = Behavior, Why = Conversions.

Connecting the dots here is a nice thought…

Yehuda: They are a little similar, though in different contexts. In the lean context I think Who, What, Why works better.

In the end there are many roads that lead to Nirvana. Some choose Jesus, some choose Krishna and some hack their own path. All good. :)

Avinash.

Awesome Article Avinash.

Using Lean principles is definitely the way forward for all successful companies.

Hi,

Thanks for this great article, which complements The Lean Startup!

Could you please help me understand how Circle of Friends measured this:

"What they found changed their business. It turned out that the engaged users were much more likely to be mothers. Looking at moms who use the application:

75% more likely to click on Facebook notifications

180% more likely to click on Facebook news feed items"

Here, are news feed items page posts, or ads displayed only in the news feed? Do you have a tool to extract this data, or do you have to analyze the Facebook page of everyone who clicks on your posts?

Thanks a lot,

Corentin

Corentin,

We go into a lot more detail on the Circle of Friends example in the book's case study. Remember that this was a few years ago, before there was good group functionality and before Google+ Circles had similar tools. So most of their metrics don't apply to the current model of Facebook data any more.

Circle of Friends was a Facebook app, and had built a significant amount of internal analytics and instrumentation to capture these actions, because they were central to user engagement. If you're an app developer, you can get this kind of instrumentation; but much of it isn't available to someone who's just running, say, a Facebook page.
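For anyone wondering what a figure like "75% more likely" boils down to once the instrumentation exists, here is a minimal sketch in Python. It assumes you already log which users clicked and can label each user with a segment, and it assumes "X% more likely" means the ratio of the two segments' click rates minus one. The function names, segment labels, and sample data are all hypothetical; this is not Circle of Friends' actual analysis or data.

```python
from __future__ import annotations

from collections import Counter


def click_rates(user_segment: dict[str, str], clicked_users: set[str]) -> dict[str, float]:
    """Share of users in each segment who clicked at least once (hypothetical schema)."""
    totals = Counter(user_segment.values())
    clicks = Counter(seg for user, seg in user_segment.items() if user in clicked_users)
    return {seg: clicks[seg] / n for seg, n in totals.items()}


def relative_lift(rate_segment: float, rate_baseline: float) -> float:
    """'X% more likely' as a fraction: 0.75 means 75% more likely than the baseline."""
    return rate_segment / rate_baseline - 1.0


# Hypothetical data: 4 "mom" users and 8 "other" users; 2 of each clicked.
user_segment = {f"u{i}": ("mom" if i <= 4 else "other") for i in range(1, 13)}
rates = click_rates(user_segment, {"u1", "u2", "u5", "u6"})
print(rates)                                       # {'mom': 0.5, 'other': 0.25}
print(relative_lift(rates["mom"], rates["other"]))  # 1.0, i.e. "100% more likely"
```

With samples this small, a lift like this could easily be noise, so you would still want to check significance before pivoting a company on it.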

I've pasted the full text of the case study from the book below, for your reference. If you want to know more, then the book is the best place to start.

A.

—————

Circle of Friends was a simple idea: a Facebook application that allowed you to organize your friends into circles for targeted content sharing. Mike Greenfield and his co-founders started the company in September 2007, shortly after Facebook launched its developer platform.

The timing was perfect: Facebook became an open, viral place to acquire users as quickly as possible and build a startup. There had never been a platform with so many users (Facebook had about 50 million users at the time) that was so open to reaching them.

By mid-2008, Circle of Friends had 10 million users. Greenfield focused on growth above everything else. “It was a land grab,” he said. And Circle of Friends was clearly viral. But there was a problem. Too few people were actually using the product.

According to Mike, less than 20% of circles had any activity whatsoever after their initial creation. “We had a few million monthly uniques from those 10 million users, but as a general social network we knew that wasn’t good enough and monetization would likely be poor.”

So Mike went digging.

He started looking through the database of users and what they were doing. The company didn't have an in-depth analytical dashboard at the time, but Greenfield could still do some exploratory analysis. And he found a segment of users (moms, to be precise) that bucked the poor engagement trend of most users. Here's what he found:

• Their messages to one another were on average 50% longer

• They were 115% more likely to attach a picture to a post they wrote

• They were 110% more likely to engage in a threaded (i.e., deep) conversation

• They had friends who, once invited, were 50% more likely to become engaged users themselves.

• They were 75% more likely to click on Facebook notifications

• They were 180% more likely to click on Facebook news feed items

• They were 60% more likely to accept invitations to the app

The numbers were so compelling that in June 2008, Mike and his team switched focus completely. They pivoted. And in October 2008 they launched Circle of Moms on Facebook.

Circle of Moms proved to be extremely popular. By late 2009, Circle of Moms had 4.5 million users and engagement continued to be strong.

The company went through some ups and downs after that, as Facebook limited applications’ abilities to spread virally. Ultimately, the company moved off Facebook, grew independently, and sold to Sugar Inc. in early 2012.

Nice article, thanks.

A lot of businesses I deal with, in fact the majority, do not know their KPIs. They don't know what to measure in the first place. I am a great believer that if you cannot measure it, you cannot manage it.

I also think a process of continuous improvement is vital, so would really advocate a process such as this.

Great read, Avinash! Going to get the book soon as well. But in the meantime, a question for ya.

How long would one have to run a test to acquire enough feedback to gain insights you can trust? (What's the minimum amount of time you run an experiment for before drawing a conclusion?)

Example: Running an experiment for a client with LinkedIn advertising over a month led me to the conclusion not to recommend going forward with the advertising, based on our predetermined KPIs. Is that even long enough, though? I mean, how long would you run small experiments such as LinkedIn advertising? Or does it have nothing to do with the length of time and everything to do with how concentrated the budget is?

Your help is greatly appreciated. :)

@jephmaystruck

Jeph: When you run experiments the duration is determined by the time period required to get statistically significant results. And that typically depends on:

1. How different each variation is (the bigger the differences, typically, the faster you can get results).

2. How many participants are in each version (A and B).

You can take your inputs and compute significance using one of many calculators available on the web for free. Here's a Google Search: http://goo.gl/OE4xc

You can also check out my article on statistical significance and download an Excel calculator here: Excellent Analytics Tip#1: Compute Statistical Significance.
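To make the two factors above concrete, here is a minimal sketch in Python, assuming a simple conversion-rate test with two variants and traffic split evenly between them. It uses a pooled two-proportion z-test for significance, the common normal-approximation formula for visitors needed per variant, and a rough duration estimate from daily traffic. The function names and the 95% confidence / 80% power defaults are assumptions for illustration; this is not the exact method behind the calculators linked above.

```python
from math import ceil, sqrt
from statistics import NormalDist

_STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def two_proportion_p_value(conv_a: int, n_a: int, conv_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test (A vs. B)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # nobody converted (or everybody did): nothing to compare
        return 1.0
    z = (p_b - p_a) / se
    return 2 * (1 - _STD_NORMAL.cdf(abs(z)))


def sample_size_per_variant(p_base: float, p_variant: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed in EACH variant to detect p_base -> p_variant."""
    z_alpha = _STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_beta = _STD_NORMAL.inv_cdf(power)
    variance = p_base * (1 - p_base) + p_variant * (1 - p_variant)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p_base - p_variant) ** 2)


def days_needed(n_per_variant: int, daily_visitors: int, variants: int = 2) -> int:
    """Rough test duration if daily traffic is split evenly across variants."""
    return ceil(n_per_variant * variants / daily_visitors)


# Factor 1: a bigger difference needs far fewer participants.
big = sample_size_per_variant(0.02, 0.04)     # roughly 1,100 per variant
small = sample_size_per_variant(0.02, 0.025)  # about 13,800 per variant
# Factor 2: duration then follows from how fast you accumulate participants.
print(big, small, days_needed(big, daily_visitors=200))
```

Under these assumptions, "is a month long enough?" has no fixed answer: the same month can be plenty for a large difference on a busy campaign and far too short for a subtle one on a small budget.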

-Avinash.

Wow, you put together a lot of insightful information here and I really like the way you arrange the diagrams. Really cool!

Now I understand how important it is to measure the results we are achieving, even at an early stage. If you are testing something, you have to analyze where that test is going to get you. Validation is also very important!

Really thorough analysis, thanks for sharing.

Cleaning up the diagrams was all Avinash!

The ones in our book are much messier. ;-)

Glad you liked it.

Nice Blog.

Thank you for sharing.

Very good blog post, thanks for sharing Avinash!

Looking forward to seeing you again at the WSI conference :)

Kristjan

That was amazing! Thank you for sharing the knowledge! :)

Cheers from Brazil.

Fell out of bed feeling down.

This has brightened up my day!