Often we present data without thinking about it too much.

We might actively think about the metrics we are computing (and avoid rookie analysis mistakes).

But it is rare that we, "Web Analysts", actually think, I mean think, about the story we are telling.

I think that's because that is not our job (I mean that in all seriousness).

Our job is to report data. On good days it is to understand and segment and morph and present analysis.

But we don't think about the implications of the data in a grander context, and we don't think about the role we can play in connecting with the Business and the Marketers, or about being so bold as to try to change the behavior of decision makers. To change company cultures.

This blog post is a short story about my small attempt at changing the culture and setting a higher bar for everyone. Using data.

The Use Case:

The data in question was survey data. This one was specifically about a day long conference / training / marketing event for current and prospective customers.

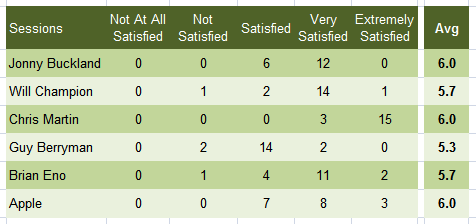

On a five point scale for each Presenter the Attendees were asked to rate "how satisfied were you with the presentation and content".

Quite straightforward.

Here are the results:

But you can also imagine getting this kind of data from your free website survey, like 4Q from iPerceptions ["Based on today's visit, how would you rate your site experience overall?"].

Or if you use free page level surveys from Get Satisfaction or Kampyle ["Please share your ratings for this page."]

In those cases you would analyze performance of content or the website.

The Data Analysis:

On the surface this is not that difficult a problem to analyze.

Here is a common path I have seen people take in reporting this data, JAI! Just Average It! :)

The actual formula is to take the average of the last three columns (satisfied through extremely satisfied).

This is ok I suppose.

I find people have a hard time with smaller numbers and then you throw in the decimals and you might as well call it quits.

Your boss, Bruce, eyeballs this and says: "Looks like everyone performed well today, let's uncork the champagne."

Meh!

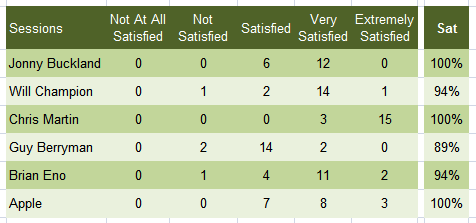

Those a bit more experienced amongst you know this and what we might see from you is not averaging but rather a more traditional Satisfaction computation.

The formula is to add the three ratings (satisfied through extremely satisfied) and divide that by the total number of responses. For Jonny: (6+12+0)/18

A bit better from a communication standpoint.

6.0 is a number hanging in the air naked, without context, and hence is hard to truly "get".

100% on the other hand has some context (100 is max!) and so a simple minded highly paid executive can "get" it. Jonny, Chris and Apple did spectacularly well. Will and Brian get a hug, Guy was great (come on, 89% is not bad!!).
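As a rough sketch of the two calculations so far, the snippet below compares Just Average It with the Satisfaction computation for Jonny. The counts (6 Satisfied, 12 Very Satisfied, 0 Extremely Satisfied, 18 responses) come from the example above; the function names are made up for illustration.

```python
def just_average_it(satisfied, very_satisfied, extremely_satisfied):
    """Average of the three positive rating columns (the JAI approach)."""
    return (satisfied + very_satisfied + extremely_satisfied) / 3


def satisfaction_pct(satisfied, very_satisfied, extremely_satisfied, responses):
    """Share of all responses that were Satisfied or better, as a percentage."""
    if responses <= 0:
        raise ValueError("responses must be positive")
    return 100 * (satisfied + very_satisfied + extremely_satisfied) / responses


# Jonny's counts from the example above.
jonny_avg = just_average_it(6, 12, 0)
jonny_sat = satisfaction_pct(6, 12, 0, responses=18)
print(f"JAI: {jonny_avg:.1f}  Satisfaction: {jonny_sat:.0f}%")
# JAI: 6.0  Satisfaction: 100%
```

Same three numbers in, two very different-feeling numbers out, and neither one says how many of those 18 people were merely Satisfied.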

The Problem.

Well two really. One minor and one major.

The minor problem is (as you saw in Guy's case immediately above) percentages have a certain nasty habit of making some things look better than they are. From a perception perspective.

My hypothesis is that in general human beings think anything over 75% is great.

So maybe we should not use percentages.

My major problem is that this kind of analysis:

- rewards meeting expectations

- does not penalize mediocrity

Both are a disservice in terms of trying to make the business great. I have to admit they are signs of a business-as-usual, let's-get-our-paycheck attitude.

Think of mediocrity. Why in the name of all that is holy and pure should we let anyone off the hook for earning a dissatisfied rating? So sub optimal!!

Consider "meeting expectations". I was upset that our company was not shooting higher. Accepting a rating of Satisfied essentially translates to: "as long as we don't suck, let's accept that as success".

What a low bar.

I believe that every business should try to be great. Every interaction should aim to create delight. It won't always be the case, but it's what we should shoot for.

And it's what we should measure and reward.

Why?

Because our way of life should be to create "brand evangelists" through customer interactions that create delight.

You like us so much, because we worked so hard, because we set ourselves such a high bar, that you will go out and tell others. Be our Brand Evangelist.

Why?

So we don't have to do that.

The Solution.

Now it is very important to point out that worrying about all of the above was not in my job description. As the Manager of a small team of Analysts (or as an Analyst) I am supposed to supply what's asked for (sure with some analysis).

But I made two major changes to the calculation, and one minor.

- Partly inspired by the Net Promoter concept I decided to discard the Satisfied rating.

  When we spend money Marketing (/ Sales / Teaching / Advocating) I am aiming for delight.

- Decided to penalize us for any negative ratings (even if slightly negative).

- Index the results for optimal communication impact.

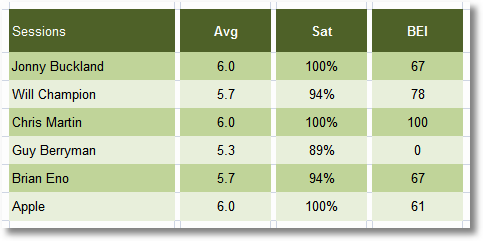

I call the new metric: Brand Evangelists Index. (Ok so it's a bit wordy.) BEI.

The actual formula applied was:

{ [ (Very Sat + Ext Sat) – (Not Sat + Not At All Sat) ] / # Responses } *100
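Here is a minimal sketch of that formula in Python. It assumes every respondent picked exactly one of the five ratings, so # Responses is just the sum of the five columns; the function and parameter names are mine, not part of any tool.

```python
def brand_evangelists_index(not_at_all_sat, not_sat, satisfied, very_sat, ext_sat):
    """BEI: delighted responses minus negative ones, over all responses, times 100.

    Satisfied responses are left out of the numerator but still count
    in the denominator (assumed here to be the sum of all five columns).
    """
    responses = not_at_all_sat + not_sat + satisfied + very_sat + ext_sat
    if responses == 0:
        raise ValueError("no responses to score")
    delighted = very_sat + ext_sat
    negative = not_sat + not_at_all_sat
    return 100 * (delighted - negative) / responses


# Jonny: 6 Satisfied, 12 Very Satisfied, no other ratings (18 responses).
jonny_bei = brand_evangelists_index(0, 0, 6, 12, 0)
print(round(jonny_bei))  # 67
```

Note that the index can go below zero: a presenter with more negative than delighted ratings gets a negative BEI.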

The Results.

Here's what the success measurement looked like:

The result was a radically different understanding of quality and impact of each Presenter.

Not obvious?

Check out all three measures next to each other:

Superyummylicious!

You can see how the Brand Evangelists Index separates the wheat from the chaff so well.

Compare Jonny's scores for example. Pretty solid before, now a bit less stellar.

In fact Will, who initially scored worse than Jonny, is now 11 points (!!) higher than Jonny.

That's because the BEI rewards Will's ability to give a "delight" experience to a lot more people (as should be the case).

Compare the unique case of Guy Berryman.

In other computations Guy was dead last but there was not much difference between him and say Will and Brian. Just a few points.

But the Brand Evangelists Index shows that Guy was not just a little bad, he was badly bad.

Sure he got a couple bad ratings but Guy failed miserably at creating delight.

He failed at creating Brand Evangelists.

And if we invest money, in these times or in good times, we demand more. Guy can't do, or has to do a lot better.

Note that Apple could also use some mentoring and evolution.

The Outcome.

Initially a few people said, what the freak! Some stones were thrown.

But I took the concept of the Brand Evangelists Index two levels higher and presented it to the VP and the CMO.

They adored it.

The reasons were that the Brand Evangelists Index

- demanded higher return on investment

- set a higher bar for performance and

- was truly customer centric

The BEI became the standard way of scoring performance in the company.

[In case it inspires you: That year I received the annual Marketer of the Year award (for the above work and other things like that). Imagine that. An Analyst getting the highest Marketer award!]

The Punch Line.

When you present data, think not just of the data you are presenting but of what you are really measuring and how you can lift up your company.

You have the data. You have immense power.

Now your turn.

What do you think of the Brand Evangelists Index? How would you have done it better? Got your own heroic stories to share? I would love to know how you used data to alter a company's culture.

Thanks.

PS:

Couple other related posts you might find interesting:

Hi Avinash,

Your article points out a common problem and your solution (making it easier for the business to understand) is exactly what is needed.

I have an alternative but similar method I'd like to share. I use scorecards in a similar manner, scoring 0-5 where 5 is excellent and 0 is poor with 3 being average.

My point here is that a weighted scorecard scale would do the same job as your index, provided extremely satisfied had a higher weighting toward the overall score than not satisfied at all.

I score all metrics, clickstream data, survey data, competitive/market data in order to be able to draw fair comparisons and be able to pinpoint business insights quickly.

By using a scorecard you can quickly pinpoint that your competitors are answering your customers' questions better, that your site's usability needs improvement and that your content needs improving in certain areas. All by saying '0' is bad and '5' is best in class.

Much easier than showing graphs of internal figures without much context. It also allows your business to set targets (IE: we need to be hitting 3-5 across all areas) and gives your analyst something to aim at.

Br

Steve

Hi Avinash,

Like Steve, I'm also surprised you didn't use a graded approach. But instead of using 0-5 like Steve suggests, I would use -3 through +3 since you want to penalize for negative rates. Keeping Satisfied values is still useful since this can make the balance shift in some cases. Using this method, the results are 30,31,51,14,30,32. Notice how the last entry is now just slightly higher than the 1st and 5th ones, accounting for 3 very satisfied and no negative comments at all.

Also, if ever you end up with different number of entries per row (i.e. not everyone voted), you could divide the results by 3*nbr of entries on a row (max possible score) to get a percentage you could compare against each other.

My 2 cents :)

Stéphane

Yo, Avinash!

Another insightful post! Forgive me while I quote you, but I need the reference to phrase my question to you.

"But it is rare that we, “Web Analysts”, actually think, I mean think, about the story we are telling.

I think that’s because that is not our job (I mean that in all seriousness)."

In your opinion, whose job is it then? To tell the story?

Thanks,

Steve

I've been thinking of implementing something like this where I work. I've been tossing around a few different ideas trying to come up with something solid. I think this is a great index. Why should we make a difference between not satisfied and not at all satisfied? On the flip side, if you're very satisfied then that is pretty good even though you didn't hit the extremely satisfied.

We will index our stats a little differently because we are looking at different stats but it gives us a good start at comparing multiple sites' performance.

Steve / Stephane: For me each calculation has to pass the four year old test. Can you explain it to a four year old?

For BEI I would say: "positive results minus the negative results divided by the total responses". If that passes, it is a definition; if not, it has to be thought through again (in the quest to change cultures! :)).

That is the same issue with grading things. Very hard to explain.

As you can imagine I started with grading since that is what everyone else used. But it did not have the effect I was hoping so I did a usability test (three divisions around 25 marketers/directors – certainly not a huge sample) with both. I was able to communicate BEI to almost everyone but had to re-explain grading a few times.

So I switched and have used it since at different companies and it has stood, for me, the test of time and multiple personalities (the nice thing is no one wants to suck, so it works out ok).

[Stephane]

Thanks for suggesting the values, it is very helpful.

Might I make a case again by requesting you to consider the "starkness". Compare this:

30, 31, 51, 14, 30, 32

with this

67, 78, 100, 0, 67, 61

While some of the deltas are similar, the starkness between high performers and low is higher.

The communication element. I am pushing for the other side of the brain: Emotion.

But there is no wrong answer as you can imagine. Different things will work at different companies, my stress overall, as is yours, is on ensuring quality gets recognized after setting a much much higher bar.

-Avinash.

you obviously know your stuff but most the time your posts are just so long… i'd prefer a quicker read. imo.

Avinash,

You literally took Satisfied/Meets out of the equation with your BEI formula.

My initial thought was, okay, if we're not going to use 'Satisfied' in our calculation or accept 'Satisfied' results in our business, why even bother to put 'Satisfied' on the customer's survey as an option to select? I struggled with this. But then I thought that as a client, that would leave me to choose either 'Not Satisfied' or 'Very Satisfied'. Given those two options, as a client, I might feel for the poor sap and give him the higher ranking out of sympathy. (I'm a nice guy.) So, after that analysis, I think we should keep the 'Satisfied' option on the survey and then just choose to exclude it from the analysis.

I like your BEI metric. I think it tells a much more accurate story than the other comparison options.

Have a good week!

Alice Cooper's Stalker

This isn't even as harsh as the standard scoring in the service industry.

Hotels, restaurants, just about everybody doing customer service uses "top box scoring."

In this system only the top box, in this case "Extremely Satisfied," is considered a positive review. Everything else is "not satisfied enough."

So when I was a valet and people turned in a response card saying they were satisfied, they might as well have checked completely dissatisfied because they were treated the same.

Giving people the range elicits a true response, but knowing what you are shooting for allows you to collapse that range.

I have played around with survey data quite a bit and I think Avinash hits the nail on the head. If you grade the scores too much, the scores get very much into the psychology of how the survey was created. As we know, the wording of questions will often have a greater impact on how many people click "very satisfied" v. "extremely satisfied", but I think we all agree that either of those means a thumbs up. I think the occam's razor solution is not only simpler, but actually smooths the survey bias and gives a more accurate score.

The brilliance is leaving out the satisfactory in the numerator, but leaving it in the denominator, thus penalizing mediocrity. This is not ignoring satisfactory, but emphasizing it!

Hmmm, Looking at that initial graph it is blatant that the first person on my list is Chris and doesn't take any analytics to see that. Then it would be close between Will and Brian. Small numbers in the dissatisfied have no bearing at all.

Avinash,

Interesting approach indeed. I like how your metric suits this particular case seeking for "extreme engagement". It can be definitely useful as a reality check in an environment where optimization has already been done and you want to give an extra push.

However I tend to prefer the weighted approach presented by Steve and Stéphane. I kind of feel that by just excluding the "average" option you can lose track of smaller variations. It seems to be a more general and flexible model.

I am actually working on some weighted indexes to gauge different aspects, from rich media to brand awareness, and this discussion will certainly inspire my decisions.

Great topic and post as usual.

Thanks,

Jose Davila

I like the fact that you are trying to separate the wheat from the chaff here, but some people would not have the budget of Google, who could just lay down the $$$ for existing superb talent. I think I would go with a weighted average (much like S. Hamel, but you can allow for more variation perhaps). For the normal company, multiply the columns by:

Not Satisfied At All: -8

Not Satisfied: -4

Satisfied: 1

Very Satisfied: 4

Extremely Satisfied: 8

Similar to how you already make negative comments negative in your calculation just also weighting heavily positive as highly positive. Then you don't punish people who could have potential with training and mentoring by high scorers, but that is my two cents. I do agree that your method is much fairer than the other two methods you showcased.

Hello Avinash,

Great post, once again!

I think I would calculate the weighted mean.

Say :

Not Satisfied = – 1

Satisfied = 0

Very Satisfied = 1

Extremely Satisfied = 2

# responses = 18

For Jonny, the weighted mean would be (-1 x 0 + 0 x 6 + 1 x 12 + 2 x 0)/18=0,66

Will = 0,83

Chris = 1,83

Guy = 0

Brian = 0,77

Apple = 0,77

This way, the ranking would be different :

#1 Big winner: Chris

#2: Will

#3 : Brian & Apple

#4: Johnny

#5 : Guy

#1 and # 2 would be the same as in your Brand Evangelists Index, but there would be a small difference between Brian, Apple and Johnny.

Obviously, the ranking should depend on the relative value that you give to every evaluation. If for you Extremely Satisfied equals truly deeply passionately satisfied and Satisfied = just ok (mediocrity), then, the ranking would be different…

What do you think ?

Have a great week!

Carmen
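For readers who want to try the weighted mean described in the comment above, here is a small sketch. The weights and Jonny's counts are taken from that comment; the dictionary keys and the function name are illustrative assumptions.

```python
def weighted_mean(counts, weights):
    """Weighted mean rating: sum(weight * count) / total responses."""
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no responses to score")
    return sum(weights[rating] * n for rating, n in counts.items()) / total


# Weights proposed in the comment above.
WEIGHTS = {
    "not_satisfied": -1,
    "satisfied": 0,
    "very_satisfied": 1,
    "extremely_satisfied": 2,
}

# Jonny's counts as given in the worked example (18 responses).
jonny = {
    "not_satisfied": 0,
    "satisfied": 6,
    "very_satisfied": 12,
    "extremely_satisfied": 0,
}
print(f"{weighted_mean(jonny, WEIGHTS):.2f}")  # 0.67
```

The comment truncates the same value to 0,66. Swapping in a different `WEIGHTS` dictionary is all it takes to try the other grading schemes suggested in this thread.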

"the four year old test" — love it!

One of the sins a web analyst can commit is to be so in love with numbers and formulas that (s)he forgets clarity.

If no actions can easily be taken from a chart, table or report then it's merely data hugging.

Steve D: I was referencing job descriptions and the list of bullet items that you are held accountable for in your performance review. There is a lot expected from you; what is not listed / demanded is that you, Analyst, go out and change the company culture or set higher performance bars.

That seems to be the job of HR / Sales / VP's / HiPPO's.

That is the sad reality.

As you noted in the rest of my post, I am imploring that it be the Analyst's job to push the envelope and do this even if it is not in the job description.

Tim: Adapting it to your unique environment is the way to go. When you are done the test you should apply is simple: "Am I hugely rewarding awesomeness and ensuring that mediocrity is being called out explicitly, even penalized?"

You do that and glory will be yours! :)

Alice CS: You totally got it. I would never recommend taking it out of the survey, you want your customers to tell you they were "satisfied". You just want to take that out of the computation, thus emphasizing great work.

Also see Dave's comment. I think he captures, better than me, what I was trying to do.

Jacob: Ouch.

I think that I would probably never go that far. There has to be at least some flexibility in the system. If for no other reason than for you, as an Analyst or HiPPO, to tease out improvement opportunities.

But mental note made: Don't take a job as a Valet (no matter how much I like fast cars! :)).

Dave: Brilliantly framed, I loved the numerator and denominator explanation. I feel bad I did not think of it! :)

Technage: I think you/me/they are making things more complicated with scoring than they need to be. See my reply to Stephane/Steve: http://is.gd/lsuR

Also very very important: I'll emphasize that you should separate measuring performance from what to do once you understand performance. There is no recommendation that you should let someone go or give up on someone else based on the performance evaluation.

Each company will decide what to do separately. The BEI measurement simply emphasizes a desire to create "delight".

Carmen: Wonderful suggestion, and thanks for sharing a detailed comment.

Your scoring makes me feel like this is the religious conversation. I say Buddhism is the best way. You say Christianity. Both peaceful, well meaning, but slightly different paths to the same destination. As long as we do our best and get to the destination I don't think She minds (She just wants us to be good and do our job on this planet! :)).

A parting thought: when faced with two ways of doing something choose the simpler one. (Occam's Razor!!)

-Avinash.

Some observations.

“How satisfied were you with the presentation and content“

If the content sucks, people will normally say that the presentation also sucks.

What if content rocks but presentation sucks? I think it all sucks anyway, because how will you know that the content is great if it was presented badly?

So we are valuing ‘satisfaction’ which is content+presentation (think iPod with noisy music or nice music but dodgy scrolling wheel).

If we assume these are company trainers, why are we presenting a static picture?

Just in your previous post you noted that looking at a static picture (https://www.kaushik.net/avinash/wp-content/uploads/2009/02/average-time-on-site-clicktracks.png) does not make any sense.

Does Chris Martin stay at BEI=100 for a fifth year in a row? Why can’t he make it 110%, or is it the fault of the BEI formula that it does not measure anything above 100? Has Apple improved twofold over the last 3 months, and has Brian Eno fallen from 90 within the same interval?

Is it the training content that requires rework? What would be the formula to note that?

If we talk to company trainees it is quite easy – you can walk and ask trainees and trainers to get an idea. If it is customers rating pages I doubt anybody explains why he is satisfied. I tried to explain a few times on such pages why I am not satisfied – responses normally are from none to automatic generated ‘thank you’ mail without any follow up action.

Example: http://screenshoots.deepshiftlabs.com/2008/05/10/worse-than-nothing-kingston-technology/

So, such controls (http://support.microsoft.com/kb/242450 – scroll to the bottom of the page) are needed not to grab satisfaction but to grab dissatisfaction and REACT to it. This is the only way, to me, to make use of it. For all other pages (without feedback controls) satisfaction (you know this better than me) can be measured as the time you are hoping your visitors will spend on it against actual values, and most likely you will never ever be able to ask visitors why. I guess you can just assume, react and re-measure in a loop.

The more traditional approach of considering the responses to the survey as a satisfaction scale (from 0 satisfaction to 4) would lead to similar results after converting to a scale of 0-100: 67; 71; 96; 50; 69; 69 (average satisfaction).

And we could fix this approach to penalize mediocrity, too (=don't consider the middle value of satisfaction -2- in the computations). The results are then: 50; 65; 96; 11; 58; 50. That is: we get the same conclusions we got from BEI. But with three improvements:

1) This approach is more accurate. For example, "very satisfied" and "extremely satisfied" aren't considered equal; in BEI they are.

2) It's easier to understand: it's a score in a scale from 0 to 100, or the scale of your choosing.

3) As a consequence of 1), it sets a higher bar for performance: to get a 100 score all responses must be "extremely satisfied". That's why Chris Martin gets a 100 in Avinash's BEI and a 96 in my approach.

Again another thought provoking post Avinash,

Although I agree that there should be more contrast in this kind of analysis to highlight areas that can be improved upon, I am not sure that ignoring or even punishing "mediocrity" is necessarily a great way to improve underperforming portions of a business (especially motivating or training staff members as per your example)

I have a fear that analysis being presented like you suggest (especially in the current climate) could possibly breed a culture of blame and short-termism, both awfully destructive things for a business.

As you originally suggest, analysis should definitely take into account the effects on the business and this has implications on how the data is presented. I'm a bit concerned that your method is too detached from how the information it provides could be used holistically.

In essence I believe in less stick, more carrot.

I think we should look at the past too. Will is in second place (BEI 78). That looks pretty good. Let's give him a hug. But what should we do when he usually (or maybe just at the previous session) gets a 90 (BEI). At once he didn't perform that well, did he? I mean: he can do better than this. Let's ask him if he feels well, or just tell him that he did perform well, BUT also that he can (so should) do better…

Nice of you to come up with such a unique way to computing a person's success. My thumbs up is with you. Great work.

Hi Avinash,

A good post once again!.

However, this time I don't completely agree with you.

You are giving the same weightage to e.g. someone scoring an 18 on extremely satisfied vs someone scoring an 18 on very satisfied. Similarly on the negative side.

While it would be a problem regarding the Accuracy definitely, there is another reason for me to strongly make this point.

My experience of rating presenters says that it's very rare for someone to rate you Extremely satisfied vs someone rating you Very satisfied. We generally stick to the 'safer' very satisfied or satisfied options unless we are ACTUALLY satisfied with the person's performance, which is rare. So there is a big difference between a person scoring a very vs an extremely satisfied.

I agree with Steve or Stephane that it should be a rated performance with the higher score definitely getting a higher weightage.

Cheers

Roopam

Hi Avinash,

I think you managed to present data in a much more attractive way, but the quality of data is still the same. What do we know about the quality of the data? What's the context? What were the outcomes?

Can we judge people's performance based on the opinion of other people?

We need more context before we can evaluate. Otherwise, a nicer way to present data makes us forget that the data is still the same.

What if Guy had to present on a very difficult/controversial subject? What if he was the last one to present, and people were just too tired to give him excellent grades? What if two people in the public just hate Guy?

And if we want to know the true evangelist power, shouldn't we also take into account the outcomes?

What if Brian managed to leave "extremely satisfied" two very influential bloggers who write about Brian's presentation on their blogs? Isn't that better than just leaving "extremely satisfied" 15 people who end up writing nothing on their blogs, as could be the case with Chris? (it's less probable, but it could happen)

I remember at school, the best teachers weren't always the ones to get the best grades. Why? Because audience is usually the mediocre element, not the teacher. And mediocre people tend to prefer mediocre people. But do we want our brand to appeal to the mediocre or to those who excel? It depends on the product or service we offer, of course.

Anyway, just some thoughts that come to me after reading you… that's why I like reading you so much: you make me think! :)

Thanks!

Great post Avinash and I like your BE index. We have similar 5 point scale surveys and I have been using the so called grading scale – the weights I use go from -2,-1,0,1,2 (I also neutralize/discard the 'Satisfied' scores by giving it a 0). Just for fun, I used my current system against your data and I got the scores 12,15,33,0,14,14. Interestingly, we both have Guy as a 0 – but Jonny does worse in my computation than in yours and Apple does better in mine than in yours :-). Out of curiosity, when I look at the data, Jonny has more 'positive' responses relative to Apple (12 vs 11), but Apple has more 'very positive' responses relative to Jonny (3 vs 0).

Anyway, this was a fun exercise and a good post to read. Amen to not using averages for analyzing results from a survey.

Why?

So we don’t have to do that.

– very insightful.

The problem with your model is that if Will has 15 very satisfied and 3 extremely satisfied, his score will be the same as Chris'.

A weighted average, as mentioned above, would be more accurate. I think this works well for some simple analysis but I'm sure YouTube video reviews and Amazon product reviews use a much more complex statistical approach.

Quick question Avinash

Where would you draw the lines on a 10 point scale (Net Promoter score type surveys) for Brand Evangelists. Would it be 1-4 are negative, 5-6 indifferent, and 7-10 are positive? Or would you go 1-3, 4-7, and 8-10. Just curious.

I like it! As I started reading, I was thinking "Net Promoter! Net Promoter"…and then that's what you credited as inspiration. So, why not just use and call it the Net Promoter Score for the presenters? If it's within a company that has made the "Customer Satisfaction" to "NPS" switch, then everyone would get what you're doing. And…every four-year-old you explained it to would be one step closer to landing a job in marketing!

Igor: My assumption is that all other rules of analytics apply. You have to trend the data. You have to look at other circumstances, some of which you mention in your email.

In context of measuring that particular conference data my hope was to provide an effective way of identifying quality. I would do the same with content on a site or for data from a sat survey.

I think you might also be mixing the "why" with the "what". I was analyzing what happened. Not why it happened. It is likely that Chris had the best content and Guy the worst. That's to come.

All my analysis would give are starting points to ask the right focusing questions.

Xavier: Thank you. I can live with that.

But as you say this would only work if the "traditional" method was evolved / changed to reflect the Performance Management message that I was advocating.

I have not seen that happening, though strains of what I have said here have existed for a while.

Pere: See the thought to Igor above about all other rules of common sense still applying.

You make good points, but I have to say at some level they might be sub optimal ways out of a hard conversation. With Guy or anyone else.

I'll respectfully disagree with you on your last point.

Teacher example is not quite right here. In this case (take my Marketing talk example or website content example I suggested) I value what the impact / outcome was. If all of the audience is out to get Guy / Apple / Me then who cares. We need to understand it, but we need to fix it.

It is insulting the intelligence of the audience to suggest that they don't have the mental capacity to give honest ratings. If they are my customers (current or potential) I take their feedback seriously. Then I fix my marketing team.

Sid: When dealing with the ten point scale I have used 1-5 negative, 6-7 indifferent, 8-10 positive. So a slight variation on what you had.

Overall remember I am deliberately trying to set the bar much higher, hence the broader negative and indifferent range.
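A quick sketch of those bands on a ten point scale. The bands (1-5, 6-7, 8-10) are the ones in the reply above; plugging them into the BEI-style "positive minus negative over responses" formula is an assumption on my part, and the sample scores are invented purely for illustration.

```python
def bucket_ten_point(score):
    """Map a 1-10 rating to the bands described above."""
    if not 1 <= score <= 10:
        raise ValueError("score must be between 1 and 10")
    if score <= 5:
        return "negative"
    if score <= 7:
        return "indifferent"
    return "positive"


def bei_ten_point(scores):
    """BEI-style index (assumed): (positive - negative) / responses * 100."""
    if not scores:
        raise ValueError("no responses to score")
    buckets = [bucket_ten_point(s) for s in scores]
    positive = buckets.count("positive")
    negative = buckets.count("negative")
    return 100 * (positive - negative) / len(buckets)


# Hypothetical responses, not real survey data.
sample = [9, 10, 8, 6, 7, 3, 5, 9]
print(bei_ten_point(sample))  # 25.0
```

Four positives, two negatives and two indifferent responses out of eight give an index of 25.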

Tim: The prime reason I did not use Net Promoter (besides the fact that I did not want to get sued by a Harvard Professor!) was the fact that NPS is actually even more aggressive than I am being with the BEI.

I believe in setting a high bar but I am balancing a bit in favor of employees (though in this whole post and comments you'll see I am egregiously biased in favor of max customer impact).

-Avinash.

Hi Avinash,

I'm back to your blog after a long hiatus, and it's made my life so much happier!

I think that weighted averages are extremely intuitive because they correspond to the physical concept of "leverage" – the farther you are from an axis, the more power you have. Try pushing a door open from its middle, then from 1 inch away from the hinges, to see this in action.

I just wish there was a better term for this than "weighted average," which causes my non-mathy friends to glaze over.

all the best,

Gradiva

Hi Avinash,

A couple of things….

I really dislike your choice of scales as you do not have a true midpoint and you actually bias toward the satisfied. 2 negative scale ratings and 3 positive scale ratings. (The midpoint should be a neutral, not positive midpoint.)

Now let us talk about the BEI, in theory it sounds great, it adds clarity to a bad analysis. In actual practice, it probably adds an unnecessary layer of complexity.

Example: “Hi Boss (AKA HIPPO) here is the customer survey data, I used a new formula to evaluate our staff, it is called the Brand Evangelist Index, it is based on { [ (Very Sat + Ext Sat) – (Not Sat + Not At All Sat) ] / # Responses } *100….” The eyes of the HIPPO glaze over as you try to explain the formula and the benefits.

A better tool would be top 2 box analysis. Here is why: it tells the story of those employees who excelled and provided a “very satisfied/extremely satisfied” level of service (HIPPOs can understand that). How do you explain this to the HIPPO? You say, “This is the percentage of Jonny’s customers that were very or extremely satisfied (in this case 67%), as compared to Chris, who did a great job as all his customers were very or extremely satisfied (100%). However, Guy did not do very well; only 11% were very or extremely satisfied. Based on the data, fire Guy and give Chris a raise.”

How does top 2 box map to BEI? Generally very well.

Jonny BEI-67 / top 2 box-67%

Will BEI-78 / top 2 box-83%

Chris BEI-100 / top 2 box-100%

Guy BEI-0 / top 2 box-11%

Brian BEI-67 / top 2 box-72%

Apple BEI-61 / top 2 box-61%
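To make the comparison concrete, here is a minimal Python sketch of both metrics, using the BEI formula quoted above. The function names are illustrative, and Jonny's response counts (6 satisfied, 12 very satisfied, 18 responses) are an assumption that is consistent with the scores reported in the comments, not a copy of the original table. The second set of counts is purely hypothetical and only shows where the two metrics can diverge.

```python
def bei(counts):
    """Brand Evangelist Index.

    counts: responses ordered from 'not at all satisfied'
    to 'extremely satisfied' (five buckets).
    """
    not_at_all, not_sat, sat, very, extremely = counts
    total = sum(counts)
    return (very + extremely - not_sat - not_at_all) / total * 100


def top_two_box(counts):
    """Percentage of responses in the two highest buckets."""
    return (counts[3] + counts[4]) / sum(counts) * 100


# Assumed counts for Jonny (consistent with BEI 67 / top 2 box 67%).
jonny = [0, 0, 6, 12, 0]
print(round(bei(jonny)), round(top_two_box(jonny)))

# Hypothetical presenter with the same top 2 box but some unhappy attendees.
hypothetical = [3, 0, 3, 6, 6]
print(round(bei(hypothetical)), round(top_two_box(hypothetical)))
```

With no negative responses the two numbers match. Once negative responses appear, the BEI drops while top 2 box stays the same.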

Finally, just because your customers are satisfied does not make them an evangelist (def. an enthusiastic advocate). The title of your metric/kpi might be slightly misleading.

Thanks

Top box sounds more sensible to me considering I am still a bit bewildered that anyone needs any type of scale to measure these examples at least.

They stand out like a sore thumb and if you really have to spend a lot of time and work with complicated mathematics you are simply making it ten times more difficult than it should be. (in my humble opinion)

Really great post Avinash, above all a rallying cry for Analysts to do more than just pass the numbers on.

It is interesting to read through the comments and see a focus on the mathematics and older models to make the argument that what we have works well already.

That focus is missing your rallying call to action, to set higher standards, but unsurprising as our job is to focus on the numbers and less beyond.

Carmen's methodology reflects the core idea: accentuate both the positive and the negative.

The top box score does not do the latter, and that is, in my opinion, a shortcoming of that methodology (your main message here).

Love the debate, thanks for moving the conversation forward.

Martin.

Hi Avinash,

Thanks. I would do something similar to Carmen, but adopt a Likert 5 point scale:

1 – Poor

2 – Below Avg

3 – Avg

4 – Above Avg

5 – Excellent

Adopting this, I would calculate the weighted score as a percentage of the max possible score of 18×5:

Johnny: (0×1 + 0×2 + 6×3 + 12×4 + 0×5) / (18×5) = 73%

So, the results we end up with are:

Score

Johnny 73%

Will 77%

Chris 97%

Guy 60%

Brian 76%

Apple 76%

As you can see, the inference is similar to your own. Hope this helps.
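The weighted score above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name is made up, and the only counts used are the ones given for Johnny in the worked formula (6 responses at 3, 12 responses at 4).

```python
def weighted_score_pct(counts, max_points=5):
    """Weighted score as a percentage of the maximum possible score.

    counts[i] is the number of responses with rating i + 1,
    so counts[0] is 'Poor' (1) and counts[4] is 'Excellent' (5).
    """
    total_responses = sum(counts)
    points = sum(rating * n for rating, n in enumerate(counts, start=1))
    return points / (total_responses * max_points) * 100


# Counts taken from the worked example for Johnny.
johnny = [0, 0, 6, 12, 0]
print(round(weighted_score_pct(johnny)))  # 66 / 90, about 73
```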

– Koushik

I first used this 10 years ago, when I needed to measure the quality of presenters. I think it is obvious that a negative score is negative (-) and a positive one is positive (+).

After some time I improved it much further: some people rate generously, some rate harshly. If someone who rates everything 100% gives you 90%, you are poor. If you receive the same 90% from someone who rates everything else below 50%, you are great.

So I calculated their ratings across all questions and then their overall index, which I use to recalculate the real value of each of their ratings (weighting the values, in statistical terms).
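One possible reading of this adjustment, sketched in Python: divide each rating by that rater's own average, so a 90% from a harsh rater counts for more than a 90% from a generous one. This is an assumption about the approach, not the exact method described above, and the rater names and ratings below are hypothetical.

```python
from statistics import mean


def relative_scores(ratings_by_rater):
    """Rescale each rating by the rater's own average rating.

    A value above 1 means the rater scored this item higher than
    they usually score; below 1 means lower than usual.
    """
    adjusted = {}
    for rater, ratings in ratings_by_rater.items():
        baseline = mean(ratings.values())  # the rater's overall index
        adjusted[rater] = {
            item: score / baseline for item, score in ratings.items()
        }
    return adjusted


# Hypothetical ratings (in percent) from two raters for three presenters.
ratings = {
    "generous_rater": {"you": 90, "presenter_b": 100, "presenter_c": 100},
    "harsh_rater": {"you": 90, "presenter_b": 40, "presenter_c": 50},
}

adjusted = relative_scores(ratings)
print(adjusted["generous_rater"]["you"])  # below 1: weak by this rater's standard
print(adjusted["harsh_rater"]["you"])     # above 1: strong by this rater's standard
```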

Also, this cannot be used as the only measurement of presenter quality: if a Microsoft guy presented Microsoft at a Linux conference, he would definitely be rated very poorly, even if he were awesome.

Avinash,

Interesting post. Having a background in finance, I agree with a weighted average method. I like the conversations this blog creates…Thanks and keep coming!

Chris

[…]

Sometime in March I commented on an article on his blog. Briefly, he presented the following problem: after an event, participants are asked for their assessment of the speaker's or trainer's performance. How can the collected data be used to evaluate the person and possibly recommend them for future events? He proposes a method to calculate a Brand Evangelists Index (BEI). For the curious, you can read the whole thing here (in English).

[…]

I see you have good taste in music. Thanks for the insight!

S P: You are the only person who picked up the clue about music in this blog post!

I am happy that you did. :)

-Avinash.