Absolute numbers are no very helpful (we had 459,245 unique visitors last month). Trends we have come to realize are better (Dec: 459,249 Nov: 591,067 Oct: 489,419). But there are customer interactions on the websites that results in outcomes for your company that yield trends that are rather difficult to decipher and translate into action.

Absolute numbers are no very helpful (we had 459,245 unique visitors last month). Trends we have come to realize are better (Dec: 459,249 Nov: 591,067 Oct: 489,419). But there are customer interactions on the websites that results in outcomes for your company that yield trends that are rather difficult to decipher and translate into action.

One factor that is not appreciated enough is that every metric / KPI (Key Performance Indicator) that you report out of your web analytics tool (or indeed from your ERP or CRM or Data Warehouse) tends to have a natural “biorhythm”, i.e. those metrics / KPI will fluctuate up or down and change due to “natural occurrences” that just happen (I can see some of you cringe! :)).

These biorhythms are hard to understand, harder still to predict and since many of us live in the Puzzle world rather than the Mystery world we spin our cycle like crazy to understand the numbers to “explain” them to the management so that they can take some action. Imagine getting a daily / weekly trend and it goes up and down and you have no idea what the heck is causing it, even after you have done your damdest to isolate all the variables.

These biorhythms are hard to understand, harder still to predict and since many of us live in the Puzzle world rather than the Mystery world we spin our cycle like crazy to understand the numbers to “explain” them to the management so that they can take some action. Imagine getting a daily / weekly trend and it goes up and down and you have no idea what the heck is causing it, even after you have done your damdest to isolate all the variables.

The result of these natural biorhythms is that it causes Analysts and Marketers to do analysis and deep dive where none is necessary, it causes some of us to look “bad” because we can’t explain the data, and it causes a lack of faith the the ability of data to provides insights.

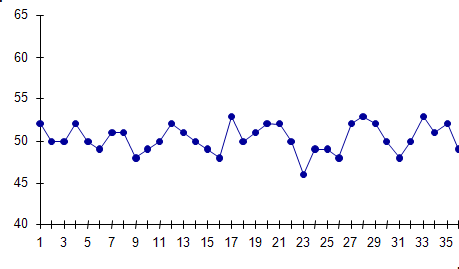

Here is a great example that illustrates the issues:

It does not really matter what the numbers on this graph are and what the x-axis is. As you look at this at point 7 or 17 or 25 would you know what the trend is telling you and if it is a cause for concern or things are ok and you don’t need to take any action or the high points are causes for celebration?

One wonderful tool / methodology that I have found to be wonderfully helpful in separating signal from noise is from the world six sigma / process excellence and its called Control Limits (or Control Charts). Simply put control charts are really good at applying statistics to assess the nature of variation in any process. Translated into the biorhythm problem in relevant situations control charts can help trigger deep analysis and action.

Control charts were created to improve quality in manufacturing situations (or others like that) but they work wonderfully for us as well.

There are three core components of a control chart. A line in the center that is the Mean of the all the data points, a UCL (Upper Control Limit) and a LCL (Lower Control Limit).

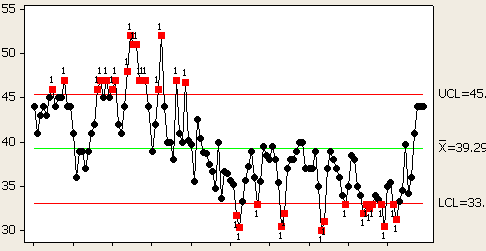

Here is what a trend looks like with control limits overlayed on top:

What are Control Limits really?

Let us understand what you are looking at.

Mean (X): The green line above. A statistically calculated number that defines the average amount of variation in your KPI trend. For example for the above process it is 39.29.

UCL (Upper Control Limit): A statistically calculated number that defines the higher limit of variation in your KPI trend. In the example above it is 45.

LCL (Lower Control Limit): A statistically calculated number that defines the lower limit of variation in your KPI trend. In the example above it is 33.

The control chart above is illustrating a natural biorhythm in the KPI trend that is in between the two control limits, these are points that show natural variation in the metric and tentatively are not causes for doing anything, even though as you can clearly see they vary quite a bit from one data point to the next.

The massively cool thing is that it shows all the points in the trend, think of it as days or weeks or months, when you should have taken action because there was something unusual that occurred. It won’t, sadly, tell you what the heck happened, but it will tell you when you should use your precious time to dig deeper. Isn’t that awesome? Think of all the time you would have wasted solving the Puzzle behind the data points below the Mean, which look like “problems”.

So how do you compute these wonderful Control Limits (UCL & LCL)?

The general rule of thumb for calculating control limits is:

(Average KPI Value) +/- (3 x (Standard Deviation))

Control limits are calculated 3 standard deviations above or below the mean of your KPI data values. They are not assigned, but rather calculated based on the natural output of your data. Anything within the control limits should be viewed as expected variation (natural biorhythm). Anything outside of control limits warrants investigation. Not only that but if a series of data points fall outside the control limits then it is a bigger red flag in terms of something highly impactful going awry.

In a world where we are tons of metrics, where every dashboard has fifteen graphs on it, control limits are extremely helpful in leveraging the power of statistics to be the first filter of when you should dig deeper or look for a cause. If your metrics and trends have variations from day to day and week to week this is a great way to isolate what is “normal” and what is “abnormal” in the trend.

Control charts also scale very well. It would be easy if for every metric you have there is a clearly established Goal that you are shooting for. That goal can tell you how well, or not, you are performing. That is rarely the case for the massive deluge of metrics you have to deal with. It is scalable for you to apply control limits to all your trends.

Practical considerations in use of control charts (limits):

- Like with all things statistics the more data points you have the better your control limits will be, it would be hard to do a control chart that makes sense with just five data points (you can create it, it just won’t be very meaningful).

- Control limits work best with metrics / KPI’s where it is a bit easy to control for the impacting variables.

For example it would be less insightful to create control limits for your Overall Conversion Rate if you do Direct Marketing, Email Campaigns, Search Engine Marketing (Pay Per Click), Affiliate Marketing and you have loads of people who come directly to your site. There are too many variables that could impact your trend.But you can easily create control charts for your Email Campaigns and PPC Campaigns or Direct Traffic and it will be very insightful because the variable is just one (or just a couple) and you will find excellent trigger points for performance and in turn analysis and in turn action.

- You do need to be able to understand a little bit of statistics and have some base knowledge around standard deviations etc so that you can leverage this optimally but also explain the power of what you are doing to your Senior Executives.

Practical example of using control limits:

The graph above shows a potential sample conversion rate of a website. Without the Red (UCL) and Blue (LCL) lines it is harder to know each month how the performance of direct marketing campaigns is faring. It is easy to know in Jan 2005 that performance was terrible. It is much harder to know that between March and July statistically there was nothing much to crab about even though the trend goes up and down.

This last point is important, anyone can eye ball and take action on a massive swing. What stymies most Analysts is separating signal from noise for non-massive swings in the data.

Consider using Control Limits on your KPI’s such as cart and checkout abandonment rates, you’ll be pleasantly surprised and happy at what you learn (as will your bosses).

Any decent statistical software will automatically calculate control limits and create these graphs for you. Minitab is the one that is used a lot by folks I know (though it is a tad bit expensive). We have also used our standard business intelligence tools to compute control limits for us (Brio, Business Objects, Cognos, MicroStrategy etc). You can also always simply jury rig excel to compute the limits for you (perhaps a reader of the blog can create a template that I can post here for everyone’s use; Update: Clint Ivy to the rescue! Here's his blog post and here's the wonderful spreadsheet he's created for us. Please download the spreadsheet and plug in your own numbers.).

You can also read a little bit more about Control Charts and try two control chart calculators at SQC Online. In the What section give the control chart calculator for variables a spin.

This is a long and complex post but I hope that I have communicated to you the power of control charts, it is a bit dry and take a small bit of knowledge and patience but it is so powerful in helping your analysis specifically when it comes for separating signal from noise.

Signal -> Insights -> Action -> Happy Customers -> Money, Money, Money! : )

What do you think? Have you used control charts? What metrics do you think they will work best with? Should web analytics vendors include the ability to do control charts as a standard option in their tools? Is none of this making sense?

Please share your feedback and critique via comments.

[Like this post? For more posts like this please click here.]

Via

Via

This is brilliant post Avinash, it addresses a fundamental problem from having KPI spam in businesses and not knowing where to even start looking. We don't use control charts at all today and that is going to change tomorrow. Thanks for providing this tip which is quite excellent and easily actionable.

All of a sudden, I'm at the University of Wisconsin in a Statistics class in 1986! Good job.

Hi Avinash,

Quite interesting! This is new to me and looks very useful; I am going to dig into it right now (thanks for screwing up my weekend ;-) ).

I would like to suggest that readers also read Gary Angel's blog today (http://semphonic.blogs.com/semangel/). I sense a very interesting debate in the making!

Great post Avinsah. Not sure about the:

Average KPI Value) +/- (3 x (Standard Deviation))

Does this take into factor Longtail? One argument is KPIs don't factor long tail considerations. This would mean that you are revolutionalizing the way companies should set up their threshold is it?

Bummer — calculated the control limits and found that this particular measure varies so widely that all variability can be described as "natural", even though we've clearly identified a seasonal variability and identified a cause for it. Still, a very interesting post from a very useful blog.

Hi Avinash,

another idea I'd put on the table is a statistical technique called Winsorization (i.e. "winsorize the data"), which uses percentiles instead of standard deviations. As such, it's generally more resistant to outliers (extreme data points).

The basic gist is that you throw out the top/bottom 5th or 10th percentile of your data, then you calculate your regular descriptive stats based on the remaining data (i.e. averages, std, etc.) For datasets that have wide fluctuations, this is a simple but very practical way to "quiet" things down and focus on the signal while omitting much of the noise.

More info here: http://www.itl.nist.gov/div898/software/dataplot/refman2/auxillar/winsor.htm

On a related note, I'd welcome some discussion about using medians vs. means. Unfortunately most WA apps don't provide this data (I believe WebTrends may be the exception).

cheers

Dave

Brian: Control Charts were originally popularized by the Six Sigma efforts and applied towards reducing defects in manufacturing processes. In as much they are not right for everything (hence my stress on isolating for multitudes of causes and using it for more "process" oriented metrics such as Cart & Checkout Abandonment rates).

I am not sure what top secret NASA data you are pumping into this :) but if you have segmented the data and it still explains "all variability" then in the past I have tried to put in more data points to see if it helps. I am not sure if it will help in your case. Thanks though for trying it, it is great to hear feedback from others.

Anonymous: As sketched in the post it would accommodate for all the data points including the outliers. But when that is observed in a metric / process I have typically done what the wonderful Mr. Morgan suggests in his comment directly above. If relevant in your case please try that (it is not just a matter of ignoring or not the top/bottom percentiles, I would encourage you to think of that decision in the context of the business process / Metric / KPI that you are applying the control limits to, i.e. what are you measuring and what is the business context of the outliers).

Dave: As always thanks for your comment, it is wonderfully helpful and adds to the quality of the conversation. Gracias!

-Avinash.

Another suggestion for a more robust calculation for upper and lower control limits are using quartiles:

Q1 = 25th Percentile

Q2 = 50th Percentile, a.k.a. Median

Q3 = 75th Percentile

LCL: Q1 – 1.5*IQR (IQR stands for Inter Quartile Range, IQR = Q3 – Q1)

UCL = Q3 + 1.5*IQR

For extreme outliers you can replace the 1.5 IQR mild outlier multiplier with a 3.

http://en.wikipedia.org/wiki/Outlier

Thanks for a great post, Avinash.

First, excellent post. In my opinion, the biggest problem in web analytics right now is an understanding of how to interpret the data and what actions to take. I think this sort of representation goes a long way to help the former.

While I'm not that familiar with TQM, but I have been thinking about how to apply statistical techniques to web data. I had actually started down the path of creating a standard deviation based graph.

One note, I think the excel spreadsheet that you posted a link contains an error. It has the formula for the UCL and the LCL as only one standard deviation from the average. I believe the correct formula is 3 times the standard deviation. Although I like the idea of showing one and two standard deviations in the graph as well as the UCL and LCL. Another thing the spreadsheet should take into account is that if the LCL is a negative value then it should be set to zero.

Anonymous,

the spreadsheet that I posted only uses one Standard Deviation because using 3 would have set the LCL to 0.1 – an impossibility for page views per visit. And even using 2 standard deviations put the LCL below 1 which should also be an impossibility.

Therefore, I only used one std dev. so that my LCL was the next minimum above the floor (1).

To your comment, about negative LCLs – you could easily update the formulae to be an IF statement so that zeros are returned if the LCL is negative.

-Clint

I would add to Wendi's comment about Quartiles, IQR and outliers. Using box plot sometimes communicates very easily the most important numbers: median, ranges, extremes, outliers, trends (by being skewed one way or the other), etc. The only problem is that no we tool provides this kind of graphing out of the box and we have to play around manually with the data.

Check out http://en.wikipedia.org/wiki/Box_plot

S.Hamel

http://immeria.net

There is a problem with your example and with the whole six sigma in general: it does not easily account for growth. For example, in your last example you have a trended conversion rate, that is, a simple linear regression would tell you that the conversion rate is increasing with time. By setting an upper limit you are effectively stifling growth.

I've had rather negative experiences with people generalizing six sigma approaches beyond what they should.

Adelino de Almeida

adelino.typepad.com

Adelino,

I think that if you are slavish to the six-sigma framework and TQM then yes, that's a valid point because what you are trying to do is manage a process within specific limits – where an outlier, either positive or negative is bad thing.

The interesting thing that Avinash has done is to take some statistical tools from a very statistically mature framework (six sigma) and apply it to web analytics data.

Because the UCL and LCL are calculated on the fly, with the entire data set, I don't see how it limits or ignores growth, what it does is pin-point unusual events that need your attention.

Furthermore, if you are concerned about the impact of historical trends on the control limits, then you could limit your calculation of the standard deviation to the last N periods instead of the entire data set.

My 2 cents worth…

-Clint

Adelino: I concur that a general application might be imprudent. In this case, the conversion rate example, it is not that we are setting a "upper limit" in the same way as we set goals. We are simply using a statistically generated "line" to help us understand metrics behavior. It is certainly not the upper limit of where we want the conversion rate to be (that would be 100% !), it is not a objective / company goal.

My hope is that people will take this recommendation and use it as a mechanism to trigger deeper analysis when they swim in a sea of data that all fluctuates all the time. That's it, nothing more – nothing less. Though I can totally see how it could be misapplied.

I am a fan of your blog and I appreciate your feedback very much. Thanks.

-Avinash.

Avinash, this is really a thoughtful post and I really admire your reserach in this area.

In my opinion, this concept would be perfect for LEADING KPIs instead of LAGGING. Most of the management dashboards I have seen over the past few years, lacked LEADING INDICATORS and in the absence of that; it's very hard to take proctive decisions by looking at the trend.

Most of the companies I have worked with simply relies on MOVING AVERAGE or to some extent WEIGHTED AVERAGE to compute the TREND but still; the picture is not clear because; they are not aware with the BASELINE figures and until and unless you have MIN and MAX MEAN values; it's hard to seperate the SIGNAL from the NOISE.

CONTROL CHARTS are worth trying and I would definetely play with it.

Avinash:

I applaud your effort in bringing some statistical rigor to the analysis of website performance, six sigma is a great tool chest to begin with.

The only aspect that I stress is that these tools provide great insights as long as they are applied correctly.

For instance, I've just posted about correlation and how it is normally mis-interpreted. Another great advantage of statistical/stochastic methods is that we no longer run our businesses out of averages but, rather, from expected ranges.

In any case: I am a great fan of your blog and I think this post is a great step towards bringing rigor into performance analysis.

Adelino

adelino.typepad.com

I've been playing with Box Plots in Excel 2007 and I just posted a step by step technique on my blog.

Check it out at http://immeria.net/2007/01/box-plot-and-whisker-plots-in-excel.html

S.Hamel

http://immeria.net

Avinash, Thanks for the web forum. As a user of TQM methods for ten years I find it helpful to eliminate outliers in the data set by applying UCL and LCL, remove the data beyond 3 sigma and recalculate the limits, repeat until no outlier exists.

All special cause events need to understood, as you state. Control chart rules are many but are statistically sound.

Hi –

I'm a relative newbie to the Analytics world but an avid fan of this blog. I'm soaking up the knowledge! Thank you to Avinash, et. al.

I have tried this application of upper and lower control limits on the rate of change of email opt in rates per week. I found I needed to eliminate the upper and lower 10% to get a reasonable standard deviation by which to examine a week's change from the previous week but otherwise it seems to work out pretty neatly.

Does anyone see an issue with using the control limits for this particular KPI? I'd love some feedback before I put this before my boss. Don't want to get the cross-eyes from him!

Thanks,

Emily

Very intresting article. We are implementing CMMI and one of the findings is : Specification limits for sub processes based on natural control limits can be identified" Can somebody help.

An interesting article.

However, most data in the real world don't adhere to mean-related statistical techniques since they fluctuate a lot for very genuine reasons – which mean cannot capture since its a very basic indicator.

An alternative method would be to fit the data onto a straight line using least square fit and use the slope, rather than the mean, to serve as the basis for control limits. This would take into account the growth/slowdown of products too.

Also, 3-sigma control limits are for eliminating outliers that fall in the outside 2% (meaning, the control limits contain 98% of the data between them). Applying a 2-sigma limit eliminates the outside 5% (meaning, the control limits contain 95% of the data between them).

For those interested or knowledgeable of statistical distributions, the formulae are based on the assumption that the data follows a normal distribution (again, this is not case in most real-world data, though they can be massaged to fit into that assumption).

Dilip, to add to your response, if there are a lot of fluctuations and they have a genuine reason, then this fluctuation is considered to be "normal". The control limits are calculated based on the distribution of the data around the mean. In a lot of instances, the data is not perfectly normal, but a multlimodal distribution, these in most instances will be a group of smaller "normal" distributions. The process owner will be able to identify these triggers easily, this should help regroup the data into different distributions and then monitor them using control charts. e.g the day of the week can be an indicator for hits on a website, or the time of day etc.

One short coming of fitting a straight line is ths normalizes the data in a very rough manner, a second degree polynomial might work better.

— This is an answer to some questions regarding getting box-plots etc out of the box, try using JMP, this is a statistical tools made by SAS, it is very user friendly with a lot of built in features.

Sorry to stir up this old thread Avinash, but I just can't resist b/c I ran into a situation where I thought this would be useful.

I read the part about this in your book and this blog post (including all comments) again and basically had/have three more questions:

1) Somebody mentioned using the slope or the most recent periods to filter out the effect of growth. Obviously if you take the data from the last 10 years the average might not be a good idea, but the data from the last year would be more insightful – that is if there's enough data during that period…thus choosing only the last periods over choosing all the historical data is mostly a balancing act?

2) The use of standard deviations seems to be exactly the same way as when they're used with other metrics. 3 SD's = 98% of the data, 2 SD's 95% of the data…and 1 SD would be roughly 68% of the data? Did I understand this correctly?

3)You mention that having a small sample size can pose a problem (as usual). Would it be right to assume that the control limits would still be "correct" no matter how many data points you have, but if you dont have enough, they're just not very insightful as the standard deviations would be very big and thus the control limits would be very big, too?

I hope my first 2 questions can be replied to with a simple yes (I hope!), but the last one really got me wondering. If the sample size is too small/too few data points, what exactly would happen that would make it harder to take action?

And is there a way to find out how many data points one would need for these control limits to be helpful? Something like a statistical significance test?!

Or would somethign like a statistical significance for the control limits have to be computed?!

Hi Avinash, I love these. Can I connect with you. I have questions on xbar R chart and was looking for 1:1 mentoring.

Avinash,

Great post. Just an update:

The link to Clint's blog post is broken. It is now at http://blog.instantcognition.com/web-analytics/2007/01/18/using-constants-in-excel-charts/

Andrew

This is a great post. It really helped me in my understanding and translating the application of control limits to everyday business processes.

Great post Avinash!

I recently took a stats class as a refresher at my local community college (unfortunately Jack Black didn't show up) and as I was reading through your post I was thinking about "Quartile Ranges" as Wendy Malley mentioned above:

LCL: Q1 – 1.5*IQR (IQR stands for Inter Quartile Range, IQR = Q3 – Q1)

UCL = Q3 + 1.5*IQR

Thanks Wendy for the insight on how the IQR relates to UCL & LCL!

Thanks Avinash for the great post!

I am in the midst of black belt training and am feeling my way through its application to web statistics. This article and conversation was very helpful.

One thing I did not see noted, though, was the difference between UCL and USL – and LCL and LSL. UCL and LCL are determined by the process. The web statistics in this case. USL and LSL are determined by customer expectation. They are the specification limits rather than the control limits.

Control charts are designed to compare the actual process variation to what is allowed by customer expectation. With web stats, I would think customer expectation (with the business owner being the customer) would be bigger is better for traffic – with an upper specification of the ability of the server and the business to handle the traffic) and less is better for bounces (with a lower specification no less than 0). So, the customer's expectations – the process specifications – would not limit growth.

DMAIC – Define, Measure, Analyse, Improve, Control

In the measure phase, you have to first make sure you are in a state of control. If you are in a growth state, any change you implement cannot be truly determined to be caused by your change. If you are in a state of control, control charts tell you if you're process operates within the customer's expectations (specification limits), if it is centered between the two limits, and if they are on target (the customer's expectation).

Then you make a hypothosis, implement a change, and do statistical tests to see if the change made a statistically significant difference. Was the increased statistic due to random chance or special causes (whichever change you implemented.) This is why you can't be in a growth state to use six-sigma to identify opportunities for growth.

In any case, this article and the discussions behind it helped my get my head around how I will use control charts with my web stats. Thanks!

A very interesting post, attractive data visualization is half the battle, bored management is often not willing to analyze it.

However, if we present them a chart with control limits, it is definitely easier for them to understand it, and this is a business analyst task.